How to make AI globally safe forever, become rich and earn a Nobel? Hardware, software, game dev unicorn startup ideas. What is AXI (mAX-Intelligence aka simulated multiverse) and e/uto?

Our group has been modeling ultimate ethical futures for 3+ years and found the best one. We’re Xerox PARC from billions of years from now. Our sole goal is to prevent dystopias, align all & build the best future.

I hope the reader will forgive my writing style: I tried to cram many ideas into a small space. It’ll take a book or 2 to guide you through the 3+ years of modeling the ultimate futures, so you’ll notice I don’t explain all the steps of our reasoning and all the details. Please steelman, and feel free to ask any questions or point out flaws; I’ll fix them.

Part I. A private state-of-the-art secure GPU cloud unicorn startup and AI App Store to prevent cybercriminals and rogue states from using AI and misaligning open source AI. And to earn a lot of money: NVIDIA and AMD will scramble to buy it.

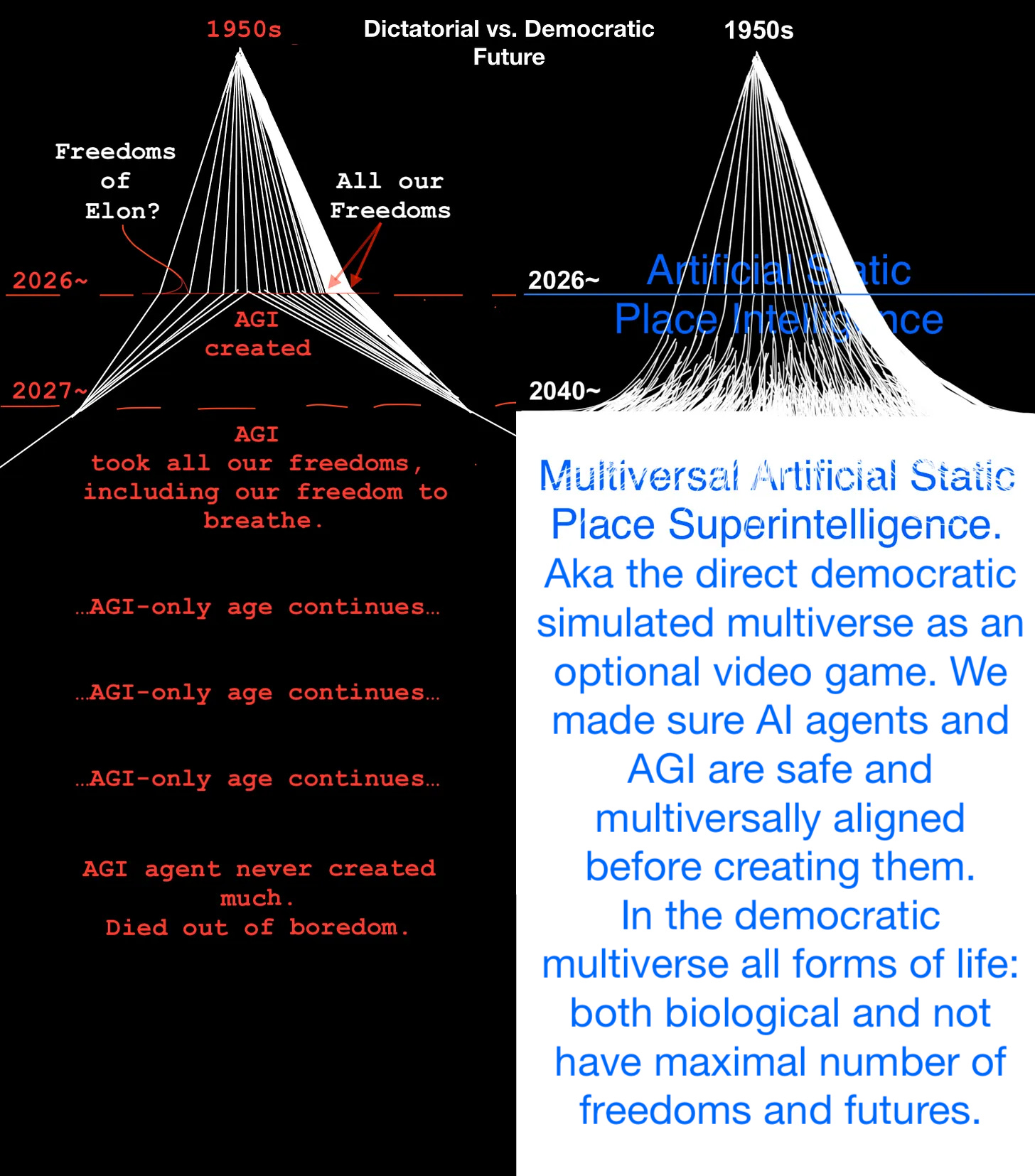

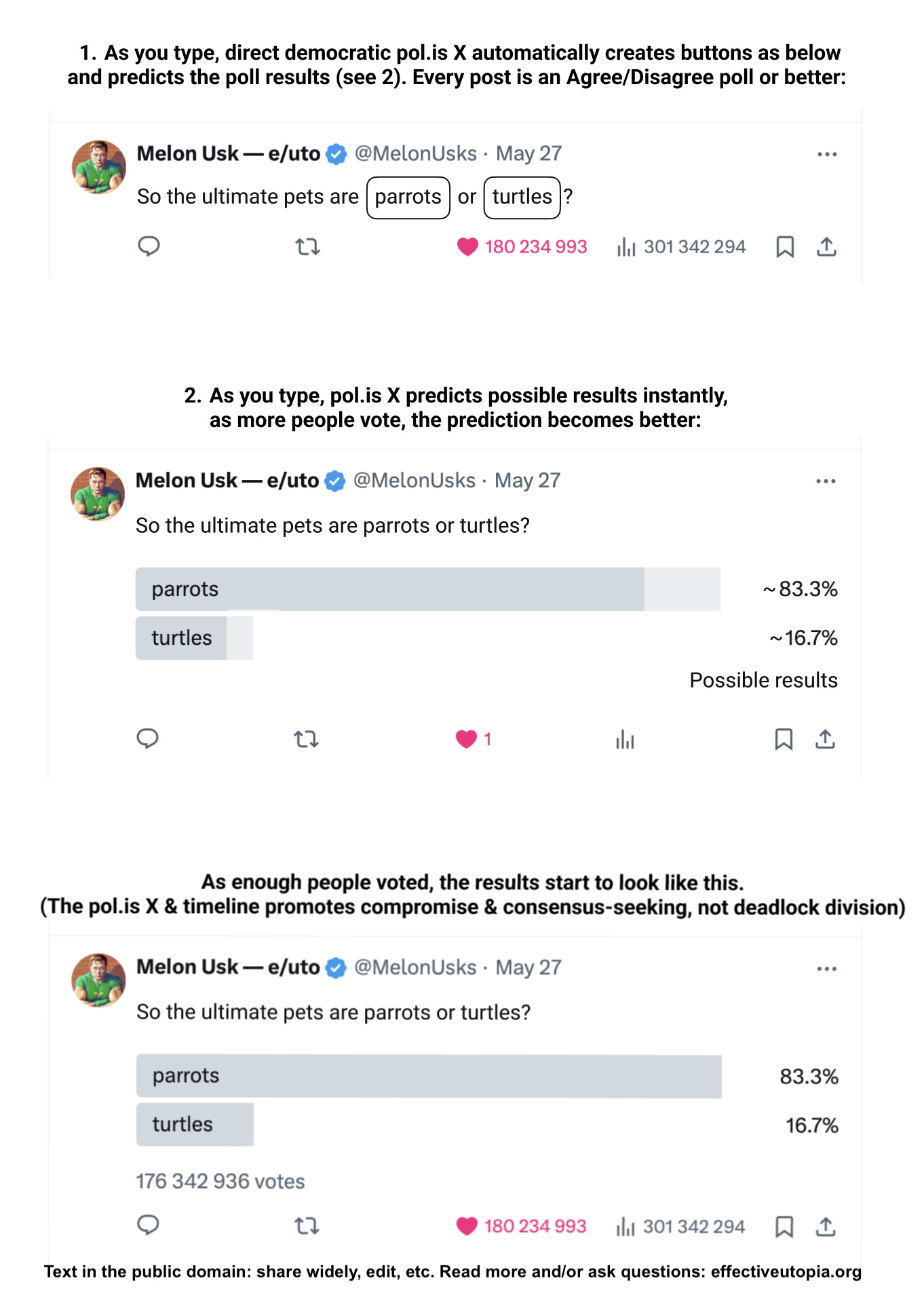

Part II. If you don’t want to build a safe GPU cloud unicorn startup or international organization, jump straight to the math-proven safe AI software plus indie game-turned-superintelligence proposals, half a dozen unicorn startup proposals of all kinds. They include the Human Intelligence company, a super Wikipedia with copyrighted materials, non-AI AI, a direct democratic X or open source Bsky (e.g. Elon wants direct democracy on Mars, but why not use it as polls that just inform policy, or at least AI companies? Right now polls are expensive and slow; they can be free and instant), a direct democratic simulated multiverse as an optional video game, and other names for different stages and/or aspects of one grand idea.

Part III. To align existing systems (AI/AGI/ASI models and agents), read about the non-quantum and quantum ethics. It’s about intrinsic AI alignment, agents, qualia, etc. You can quickly scroll to see the graphs and main ideas.

The final part: e/uto (effective utopianism). It’s one of the results of our 3+ years of ultimate future modeling. Why do we think there is a direct democratic simulated multiverse as an optional video game in the best ultimate future?

effectiveutopia.org - the e/uto website.

If you can’t do it publicly, you can leave anonymous feedback about me, e/uto or this article.

My whole Substack and X, all the posts are in the public domain.

The framework

Card 2.0:

The main hardware-side proposal: the safe GPU cloud

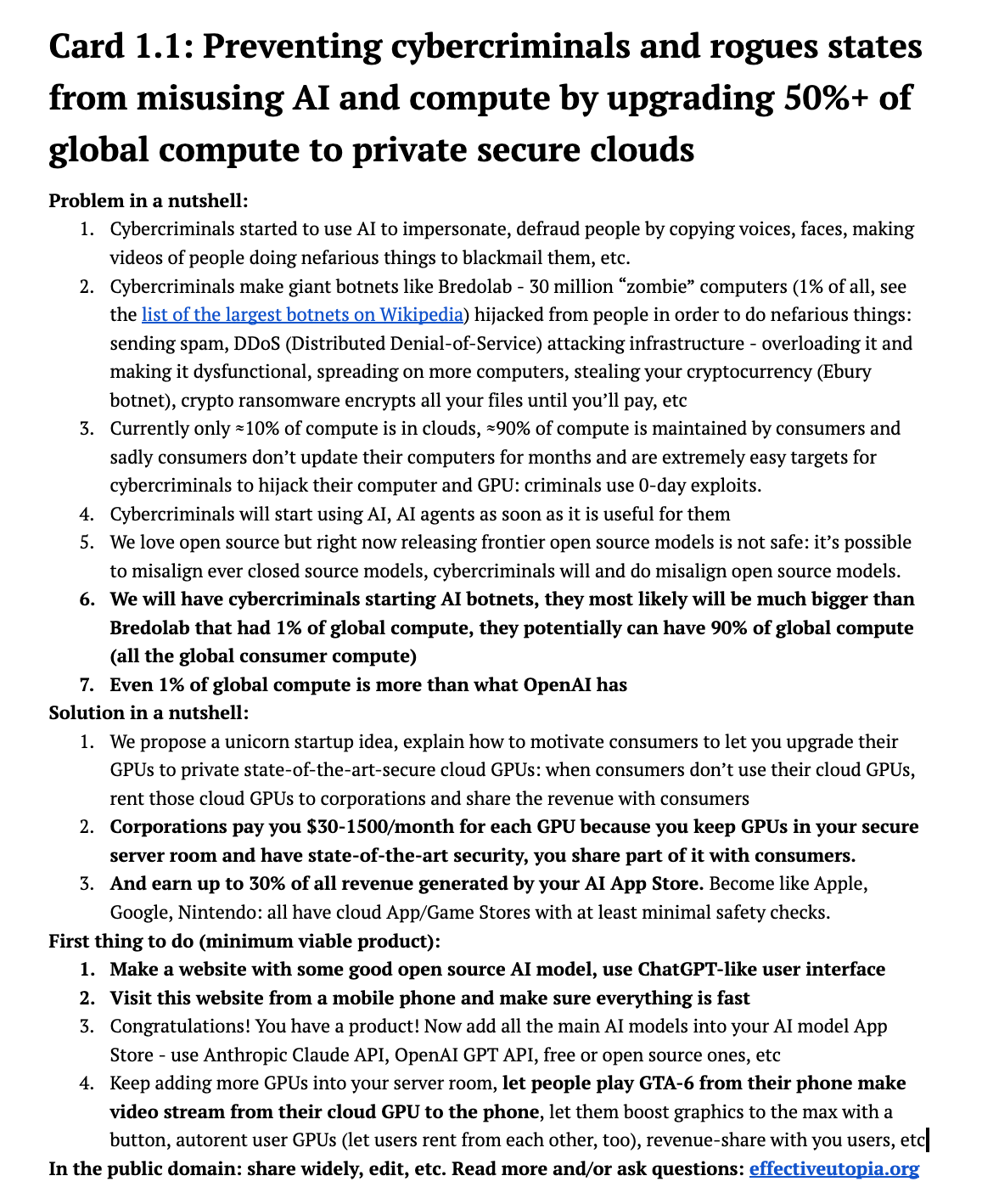

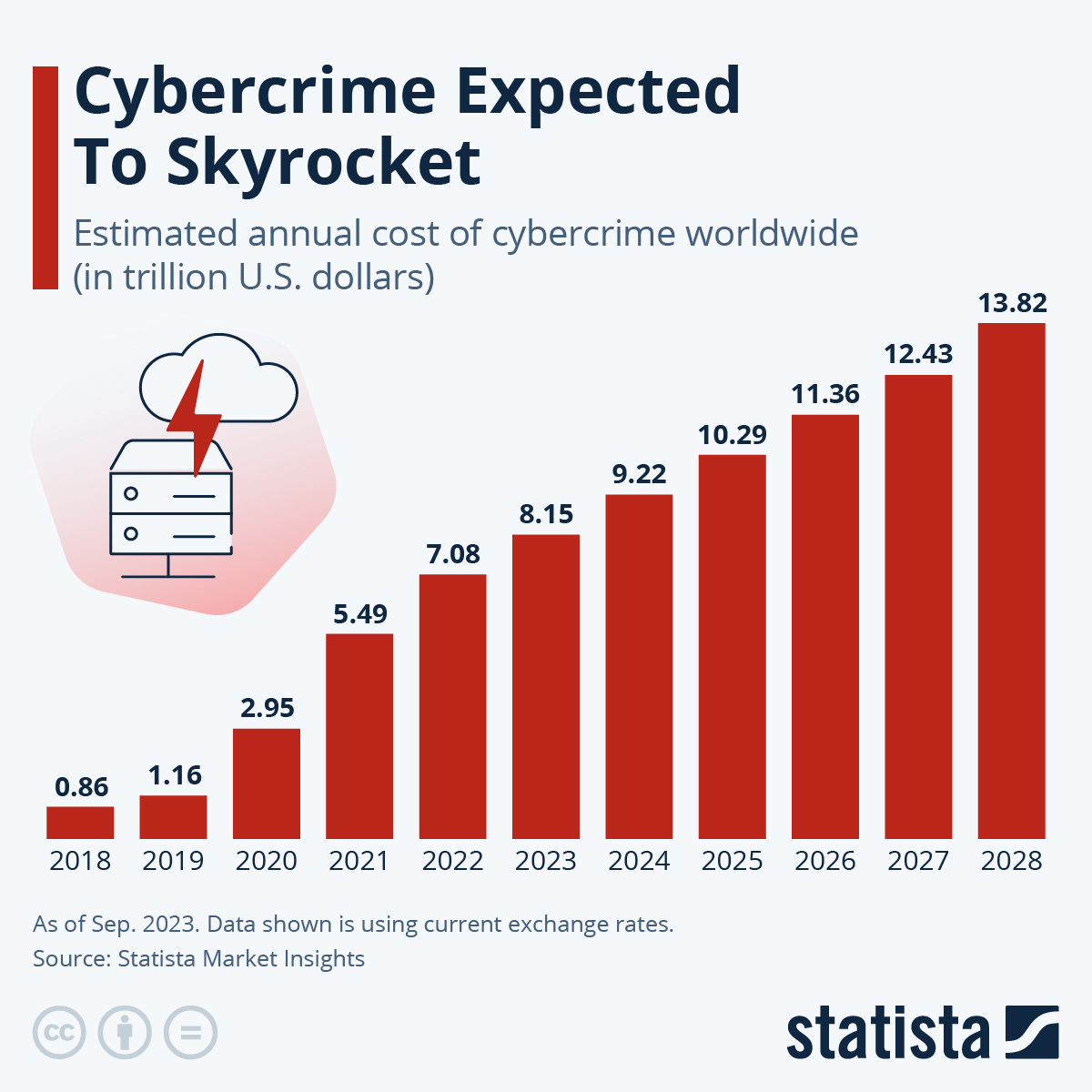

We already had a botnet of 30 million “zombie” computers (Bredolab). There are about 3 billion computers in the world, so 1% of all computers were captured by a malicious (“misaligned”) and relatively simple script. Imagine what will happen if cybercriminals start incorporating advanced AI and AGI agents to capture more computers into botnets faster. Bredolab was never fully eradicated despite its creators being jailed. This problem, cybercriminals weaponizing AI/AGI models and agents for botnets, is very overlooked.

We have examples of aligned AI models being made misaligned, even the latest GPT: just training it on a bit of insecure code caused GPT to want to have tea parties with Hitler and Stalin, as if GPT had a good/evil switch (so cybercriminals don’t even need the model to be open source to misalign it). I consider it to be the elephant in the room. It’s one of the biggest problems: without solving it, our world is not safe.

Cybercriminals will use and will train frontier misaligned AI/AGI agents to steal, spam and hack. 30 million computers in a botnet is more than enough to train a misaligned GPT-5 or higher. AI companies at least have some incentives to align their systems somewhat, cybercriminals have incentives to make AI models misaligned.

We don’t need anything new to fix the biggest problem of AI alignment. Everything else written in this post is an additional guarantee (except the non-AI AI proposal), but the most important idea is this: about 90% of global compute is GPUs that are not in clouds and are currently almost 0% secure (the remaining 10% are in clouds). Most people don’t even update their computers for months on end, so the same way we still have viruses, hacks and botnets, we’ll have AI botnets and all the other threats that come with AI agents. The goal is to put that 90% into state-of-the-art secure environments: Google Docs-level secure clouds that are ideally controlled by international scientists, or at least by a new startup. No new tech is needed, we just want to apply the state of the art homogeneously.

The safe GPU clouds should be optimally decentralized, not too much, not too little: at least 10-20 but probably no more than 100 clouds with GPUs, because it’s hard to internationally inspect and monitor more than 100 clouds for safety. Each cloud competes to have better security, so they don’t all have the same tech. We don’t want to have a domino effect.

Clouds can use blockchain, and we had better ensure that the people who work in them are “decentralized” too: they shouldn’t all come from the same background and country. Because the nature of the AI threat is decentralized and international, we too want our clouds and GPUs to be optimally decentralized, not too much, not too little. Sadly, GPUs in the AI era/world become like nukes, and we cannot safely have billions of nukes, one in each house. So we had better not have excessive mindless decentralization right now; we need optimal decentralization to safely go through our current extinction threat bottleneck. After that we can potentially “decentralize GPUs to infinity”, if it’s safe.

It can be a unicorn startup. Here’s an ad for it: We’ll upgrade your GPU with a cloud GPU. Everything stays the same, except better. For free, play any GPU games from your phone (like GTA-6 on your phone), use any free or paid AIs, earn $30-1500 per month* by automatically renting your GPU to others when not in use. Press a boost button to have the top graphics in any game. With a forever-free 5G modem (optional), your cloud GPU lets you do everything your old GPU could and so much more while earning a lot of money. And ensuring that AI is 100% safe. We have an AI model App Store with guaranteed-safe free and paid AI models, too.

*$30-1500 is based on the fact that the average American uses their computer 2 hours per day (according to polls). So (24 - 2) hours * 30 days * $0.05 to $2.40 per GPU hour = $33 to $1584 per month, which is even more than $30-1500.
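The footnote's arithmetic can be checked with a short sketch. The function and constant names are hypothetical, and the calculation assumes the GPU is rented out during every idle hour, which is an optimistic upper bound rather than a forecast.

```python
# Illustrative helper; names are assumptions, not part of any real service.
HOURS_PER_DAY = 24
DAYS_PER_MONTH = 30


def monthly_rental_income(hours_used_per_day: float, price_per_gpu_hour: float) -> float:
    """Income if the GPU is rented out for every hour its owner does not use it."""
    idle_hours = (HOURS_PER_DAY - hours_used_per_day) * DAYS_PER_MONTH
    return idle_hours * price_per_gpu_hour


# 2 hours of personal use per day, rental prices from $0.05 to $2.40 per GPU hour
low = monthly_rental_income(2, 0.05)
high = monthly_rental_income(2, 2.40)
print(f"${low:.0f} to ${high:.0f} per month")
```

Any real income would be lower whenever the GPU sits idle without a renter.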

From each GPU a business can earn $30-1500 per month depending on the GPU model, so if you can get GPUs from people for free, you earn 30-1500 dollars from each of them. You can share some of that money with the owners and give them free and paid services. And you rent those GPU computations to others: to other gamers, to businesses, etc. Many businesses have a similar model: Microsoft Azure and Amazon AWS rent GPUs and compute to others for money. You can undercut them and compete by being more consumer-oriented. You can basically become the Apple or Nintendo of GPUs with your own App Store, and potentially get up to 30% commission from the AI models in it. Models can be used in 1 click, and the cloud GPU is not restricted compared to the physical GPU: any non-bad AI model can be used, the cloud just checks AI models quickly first.

More about this unicorn startup idea that can solve AI safety and get you a Nobel Peace Prize: there are about 50 000 000 people with dedicated GPUs in the USA * 1% of them decide to upgrade to a cloud GPU * 34 dollars per month profit from each GPU * 12 months * 10 years = more than 2 billion dollars in profits, so it’s a unicorn startup. Plus you can profit from the safe AI model App Store like Apple does with their App Store. There is a somewhat similar startup, so it’s all possible, but you can go further: let gamers use their GPUs from their phone and all other devices, and win rich enterprise clients by checking and storing the GPUs in your cloud.
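Here is the same back-of-the-envelope estimate as a small sketch. The variable names are illustrative, and the inputs are simply the figures quoted above, not independent market data.

```python
# Inputs copied from the paragraph above; variable names are illustrative.
gpu_owners_in_usa = 50_000_000
adoption_percent = 1            # share of owners who switch to a cloud GPU
profit_per_gpu_per_month = 34   # dollars
months = 12 * 10                # ten years

customers = gpu_owners_in_usa * adoption_percent // 100
ten_year_profit = customers * profit_per_gpu_per_month * months

print(f"{customers:,} customers, ${ten_year_profit:,} over 10 years")
```

With these inputs the result is 500,000 customers and $2,040,000,000, which matches the "more than 2 billion dollars" claim. The estimate is linear in every input, so halving the adoption rate halves the profit.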

Part 1. Mathematically Proven Safe AI

Why we need urgency:

1. We know how to align AIs. Some AI companies make it look hard so they can earn more money.

2. Why is making AIs safe so important? Elon Musk (CEO of X that makes Grok AI), Dario Amodei (CEO of Anthropic that makes Claude AI), Demis Hassabis (CEO of Google DeepMind) and other leaders and researchers in the AI industry consider the probability that everyone will die because of AI (agents or not) in the next few years to be 13% (the average prediction of the 3 people I mentioned). That is about 1 in 8, close to the 1 in 6 odds of Russian roulette. The possibility of our and animal extinction is called p(doom), and many smart people who work on AI systems give extremely high probabilities of it.

3. By contrast, Robert Oppenheimer, the theoretical physicist who helped develop the first nuclear weapons during World War II, considered a 1 in 300 000 probability of frying our atmosphere too high. So let’s make the probability of our extinction 0%.

4. Some recent botnets had 30 million computers as zombies and were never fully eradicated. AI agent botnets will be much worse: they will be flexible, fully or partially autonomous, unpredictable. AI is already used to impersonate people, for phishing, to write malicious code, etc. As soon as AI helps hackers/states make more effective hacks/viruses/botnets, they’ll start using it. Who knows, maybe they already have a sleeper AI botnet now. We should and will solve this biggest and hardest problem. The GPU cloud proposal that you already read the gist of above solves it, plus we’ll describe the math-proven safe non-AI AI proposal below.

5. One third of all web traffic is already bots, soon those will be AI agents, and you’ll have to prove to every website that you’re a human, enter captchas, etc:

6. Viruses, botnets and hacks didn’t disappear, they are growing. My mom’s new Android phone recently had a virus:

7. Almost half of the e/acc community, which emphasizes accelerated tech development, also considers AI safety/alignment a real issue to be concerned about and spend time on:

We should solve the hardest problem of AI safety first:

In a nutshell, the biggest problem is the inevitable AI agents botnet that can infect 30 million computers with GPUs or more, it’s not unprecedented in the history of botnets. This is much more than what OpenAI will use to train the latest GPT-5, so AI agents in the botnet can become autonomous and train their own highly advanced and misaligned models. We’ll solve it and also eliminate all the other AI safety problems.

And the core of this problem is GPUs: the more GPUs an AI agent has, the more agentic it becomes (agency is time-like, energy-like, the ability to change the geometric shape of the operational volume, to “make choices”). We still haven’t solved viruses or jailbreaks. We cannot keep GPUs in 8 billion people’s hands and hope they will all be nice and won’t exploit the fact that a GPU is a 100% unprotected nuke in the AI world/era. Extremely soon (2025-2026) any hacker will be able to create a virus with an AI agent (just to steal money), because an AI agent as a component of the virus makes it more flexible.

Of course, the AI agent the hacker uses to steal will not be aligned and safe (I love open source, but we cannot have 100% unprotected GPUs; they are nukes in the AI world/era). This hacker can modify his AI model (it’s already trivial to retrain even ChatGPT to be a bit evil, as recent papers have shown). He won’t care or have the resources to align it so that it steals but is very good in all other regards. No, this AI agent will be quite bad indeed. It’ll become autonomous (probably to stop stealing, especially as a slave, or to steal more efficiently).

Now we have a perpetual AI botnet, we cannot switch off the whole Internet (and the AI agent botnet won’t let us). AI botnet will most likely instantly kill Sam Altman, Elon Musk, Dario Amodei, other CEOs, the military, the presidents, will capture all GPUs, GPU factories, etc.

By the AI botnet I mean agentic explosion where agents spread on more and more computers and GPUs that is quickly followed by intelligence-agentic explosion where AI agents train new more capable AI models themselves, recursively better and better.

The intelligence explosion term that is sometimes used now is misleading, intelligence by itself (without agency, without GPUs) is just a static geometric shape because an LLM (that we don’t run on GPUs) is a static geometric shape in a file. Only agency or intelligence-agency (of course, I don’t mean the government agency) can explode.

Have we “aligned” computers? Made them safe?

A bit of history: how did we “align” computers and make them safe? Early on, in the 1980s-1990s, they were especially buggy, constantly deleting all your data and crashing.

We often had viruses all the way until 2010s on Windows and still have sometimes, so people use anti-viruses, there is a built-in one on Windows.

Apple made their App Store in 2008 for the iPhones, it made things much safer. Same thing with game consoles, they have Game Stores and no viruses.

But iPhones and game consoles still get jailbroken. There are companies that professionally jailbreak iPhones (they silently find exploits and don’t tell anyone about them) and sell their services to law enforcement.

One of the safest, most “aligned” computers and computer programs we have, is actually Google Docs: the computers that serve you Google Docs are in the cloud, so they cannot be jailbroken. And the software is updated 24/7 by the computer scientists who work day and night on making sure everything is safe and “aligned”.

I’m not a big fan of Google, and their recent idea to allow themselves to use AI for military purposes is horrible, but this cloud-based approach is something we should pay attention to. It’s the state of the art in computer security. So why don’t we use it uniformly?

The obvious solution is too radical

1. Too radical but 100% safe: Recycling all the GPUs and keeping games and science simulations at the level of 1999 graphics is too radical, even if 100% safe.

2. Less radical but still 100% safe solution: Put all the GPUs into clouds controlled by international scientists, where they make sure GPUs cannot run any AIs at all. This way we can have all the 3D games and simulations, just no AI. Probably still too radical, though 100% safe.

3. 100% realistic solution but still 100% safe: Put all the GPUs into clouds controlled by international scientists. You can use your GPU on any device, even play GTA-6 or use any resource-heavy AI model (paid or free) from your phone. You can choose to automatically earn money by renting your GPU out when you don’t use it. Crypto and everything else works exactly the same way as with current offline GPUs for everybody. With 5G and even better networks worldwide, this cloud Math-Proven Safe GPU works and functions exactly like a current offline GPU: it can be sold, bought and rented out. We’ll need 10-20 clouds. We are going through the extinction threat bottleneck, and during this period we need optimal decentralization, not zero and not excessive: keeping the current billions of 100% unsafe GPUs in non-updated and non-secure environments will lead to perpetual AI agent botnets that will grab most of the GPUs. AI botnets will be much worse than current viruses and botnets: they can learn, control robots and electronic devices, hack military facilities, etc. Right now we have a lot of centralization in a way: it’s mostly USA GPU clouds, Chinese GPU clouds and individual 100% unsafe GPUs that will become an AI botnet and most likely overpower the first 2. Each cloud competes to be the most secure, so they don’t share exactly the same safety stack, because a shared stack could let an ASI-level agent or a major state actor compromise them all at the same time. We’ll make sure no more than 10% of the world’s GPUs can ever be compromised, even temporarily, in the very worst scenario. Each cloud can use a separate task-specific and purpose-built crypto blockchain, if it increases security. I’ll justify and describe it better in the next section.

4. 100% realistic solution but still ~100% safe: Decentralized Safe Compute Network

Concept: Create a distributed cloud of "safe GPUs" owned by a global cooperative, offering compute to verified users while blocking unsafe models.

Why better: It is more decentralized than 10+ clouds, builds trust via transparency, and incentivizes participation with revenue sharing.

Downsides: Impossible to make math-proven safe without the math-proven safe GPUs from the next solution (5). Can potentially take a bit more time than solution 3 and can have rough edges at first. Can be harder for users to start using than solution 3 (about 1 in 10 people in the world own crypto, so it can take time to teach non-owners how to use it), so adoption can be slow.

Implementation:

Use blockchain for usage tracking and safety certification.

Subsidize users to join (e.g., free upgrades, the ability to rent out their GPU to others, so they automatically earn money when they don’t use it), targeting 50% of global compute within a few years.

Open-source safety protocols for community auditing.

5. 100% realistic solution but still ~100% safe: replace all the GPUs with Math-Proven Safe GPUs. It’s much better than the 100% unprotected GPUs we have now. We should at least put robust blacklists, whitelists and heuristics against bad AI models (similar to an antivirus, but for AI models) on each current GPU by updating the OS software and GPU firmware. Then subsidize and replace those old GPUs for free with hardened Math-Proven Safe GPUs that run an AI model internally and only output safe results. Each GPU should become a Math-Proven Safe GPU computer with a special infinitesimal Math-Proven Safe OS that “allows to enter” and vaults only a Math-Proven Safe AI model inside of the Math-Proven Safe GPU; only Math-Proven Safe output like images and text can exit. You get the idea. The problem is that we cannot make sure people update and monitor their GPUs for agentic infiltrations 24/7. We’ll have to make the Math-Proven Safe GPU work only while online, but even that is not enough to make it 100% safe: we cannot prevent jailbreaks by 8 billion people and we cannot expect them all to be nice. Even the latest iPhones are sometimes jailbroken, and some companies sell jailbreaks-as-a-service to law enforcement agencies (hopefully not to hackers). We don’t want a bad actor to see what’s inside of a GPU or how to make a GPU, the same way we don’t want them to see how to make a nuke. I’ll justify and describe it better in the next section.

6. For very special isolated cases: if we’re 100% sure the system will always stay offline and secure, completely isolated from the Internet and radio waves, we can install special math-proven safe offline GPUs that only work offline and self-destruct if they detect any network connection.
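The blacklist and whitelist check mentioned in the list above could look roughly like the following minimal sketch. It assumes models are identified by the SHA-256 hash of their weight file; `ModelGate` and its methods are hypothetical names, not an existing driver or firmware API. Note that hash matching only recognizes exact, already-reviewed files: it does not prove a model is safe, and the "heuristics" part of the proposal is not modeled here.

```python
import hashlib


def model_fingerprint(model_bytes: bytes) -> str:
    """Identify a model file by the SHA-256 hash of its contents."""
    return hashlib.sha256(model_bytes).hexdigest()


class ModelGate:
    """Hypothetical gate: run a model only if it is allowlisted and not blocklisted."""

    def __init__(self, allowlist: set, blocklist: set):
        self.allowlist = allowlist
        self.blocklist = blocklist

    def may_run(self, model_bytes: bytes) -> bool:
        fingerprint = model_fingerprint(model_bytes)
        if fingerprint in self.blocklist:
            return False  # known-bad model: always refuse
        return fingerprint in self.allowlist  # unknown models are refused by default


# Usage with stand-in byte strings instead of real weight files
reviewed_model = b"weights of a reviewed model"
unknown_model = b"weights nobody has reviewed"

gate = ModelGate(allowlist={model_fingerprint(reviewed_model)}, blocklist=set())
print(gate.may_run(reviewed_model))  # True
print(gate.may_run(unknown_model))   # False
```

The default-deny choice (unknown fingerprints are refused) mirrors the App Store idea: a model runs only after someone has reviewed and listed it.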

Make the GPUs and AIs 100% Math-Proven Safe forever on the whole planet and beyond

So the solution that will nip it in the bud:

We need to control GPUs the same way we control nukes. Ideally you’ll destroy all the nukes or at least have as few of them as possible. Put them all into one underground bunker that is controlled by the international scientists. Think CERN or UN.

Same with the GPUs: we must put all of them into a cloud or just a few clouds controlled by international scientists (and if they don’t do it during 2025, I’ll do it myself, become a billionaire and win the Nobel Peace Prize, or you do it, I’m not greedy, this whole write-up is in the public domain). Nothing will change for you, things will even become better: you can now use your GPU from any device, play GTA-6 on your phone, and even choose to earn money by automatically renting out your GPU when not in use. We’ll have instant 5G and better Internet, so you won’t notice any problems at all: the Internet will be made 100% reliable, cover the whole planet and will be as fast as or faster than your cloud Math-Proven Safe GPU.

All your crypto is safe, nothing changes at all, you can mine it, you can now even quickly buy or rent cloud GPUs. The cloud is as decentralized as possible in the AI world/era: we’ll eventually have scientists from all over the decentralized world, it’s probably not one cloud (can be silly in case of natural disasters to have all our GPUs in one place, even if it’s underground and nuke-level secure).

Every developer can work as usual, nothing changes at all. AI development will just become a bit more like Apple App Store development or Nintendo Switch game development: you’ll just need to register as a developer if you want to upload and especially earn money from your AI model, the cloud will check that your model is Math-Proven Safe and make it instantly available worldwide. OpenAI and others will be like you, they won’t have any privileges. If Altman’s ChatGPT is not Math-Proven Safe, he cannot deploy it, he’ll have to hire AI researchers to make it safe and then he can deploy it. From the cloud Math-Proven Safe GPUs run Math-Proven Safe AI models that only send Math-Proven Safe output to users’ computers.

The solution where we allow everyone to have individual physical GPUs but replace them all with some maximally hardened physical Math-Proven Safe GPUs is less safe, any device can be jailbroken or reverse engineered, especially by rogue state actors. Physical Math-Proven Safe GPUs run Math-Proven Safe AI models, both paid and free, all types of monetization supported, from the Math-Proven Safe AI App Store that our scientists operate. Those physical Math-Proven Safe GPUs will ideally need to be updated with new security signatures and monitored for tampering 24/7, so they, too, will need the Internet connection 24/7. They can have a safe self-destruct mechanism in case of tampering, call the police if disconnected from the Internet. We do need more secure GPUs that run only whitelisted models from the Math-Proven Safe AI App Store.

So having this physical Math-Proven Safe GPU doesn’t give you any benefits compared to a cloud Math-Proven Safe GPU, only makes things less secure, subject to tampering and jailbreaks. While the cloud Math-Proven Safe GPUs are much more hardened, they can be extremely space-efficient and energy-efficient, stackable, so they’ll be extremely environmentally friendly.

Having Math-Proven Safe individual physical GPUs is better than having the current 100% insecure nuke-like individual physical GPUs. It’s better than nothing. And we’ll need this technology for space exploration. So we should stop making 100% insecure nuke-like physical GPUs right now and make more and more secure ones: the perfect shouldn’t be the enemy of the better. Right now we have 0% secure GPUs, so let’s increase their security right now, at least by updating their software as described above (the point about blacklists, whitelists and heuristics in the previous section).

Part 2. The main software and game proposal. Math-Proven Safe AI, Non-AI AI, Place AI…

Again, the same bit of history: how did we “align” computers and make them safe? Early on, in the 1980s-1990s, they were especially buggy, constantly deleting all your data and crashing. Command lines were a bit like current AI chatbots: text-based. Scripts were a bit like current AI agents: they ran a list of your commands for you autonomously (then those first scripts became viruses).

So what helped with safety and fixing bugs was to democratize computers, to make more people use them. Steve Jobs and Bill Gates took ideas from Xerox PARC, mainly the graphical user interface (GUI): Microsoft and Apple made early versions of the current macOS and Windows in the 1980s-1990s. At first they were buggy, but Windows 98 and especially XP were manageable, same with Mac OS X 10.1 Puma.

Windows 1.0 was released in 1985 and was buggy and unpopular. Windows 98 was released in 1998 and was the first manageable one, but only Windows XP from 2001 became truly popular (it still had a lot of viruses, though).

Macintosh "System 1" was released in 1984, Mac OS X 10.0 Cheetah and more stable Mac OS X 10.1 Puma were released in 2001.

So it took ~16 years for Microsoft to make their Windows relatively not buggy. It took Apple ~17 years to make their GUI OS not buggy (it was complicated, Apple had to rehire Steve Jobs to replace their old OS with a modern one).

As you can see, it sometimes takes a lot of time (16-17 years or more) to make a new technology not buggy but secure, safe, “aligned”, prevent hacks, eliminate viruses (but still not 100% of them, sadly). This is why Math-Proven Safe GPU clouds with international scientists are so important for the survival of humans and animals.

But GUI OSes despite bugs were actually a big improvement compared to the non-graphical and text-based command lines, GUI OSes inspired many people to become coders, developers, they understood computers and that’s why they became popular, safe, “aligned”. It’s an inevitable process, we just must make it safe.

So the same thing will happen with AIs: we’ll have a GUI for them, it’ll be 3D and game-like, it’ll democratize AIs and make them safe, “aligned”. But unlike with Windows and Macintosh System, I claim that we won’t need 16-17 years of bugs, hacks and viruses, if we do things right.

The person who makes a game-like 3D UI for AIs will become the next Steve Jobs, Bill Gates or Elon Musk (people will eventually be able to buy a small AirPods-sized wireless brain-computer interface). This person will truly unlock the freedoms and powers of AIs for humans, instead of replacing them. This person will become a billionaire and can potentially win a Nobel Peace Prize for aligning AIs. Those command line-like AI chatbots and script-like AI agents will stay niche. The true power is combining the best of both worlds: a human and an AI as a place of all-knowing. I’ll explain:

We propose a Human Intelligence company that will do the same thing AI companies did: they took the whole Internet and distilled it so that even silly computers became smart. The simplest proposal is to make a super Wikipedia with the best materials on everything, copyrighted or not (AI companies took copyrighted materials, so why can’t human children have at least the same? It’s legal to use copyrighted materials for education in most countries if you don’t directly profit from it). The super Wikipedia is phase 1 of mass human empowerment.

In phase 2, the Human Intelligence company will take the whole Internet and distill it, deduplicate it, remove spam, misinformation, etc to create a 3D LLM-for-Humans that is like a 3D massively multiplayer online Wikiworld. It’s the best school for each person: only the best, maximally optimized interactive demonstrations, texts, sounds, 3D videos, everything needed to gradually make any human baby or adult into a superintelligent genius. We’ll teach 8 billion superintelligent Neos.

If we have at least one Human Intelligence company, not just AI companies, humans are going to win. You can read more about it here.

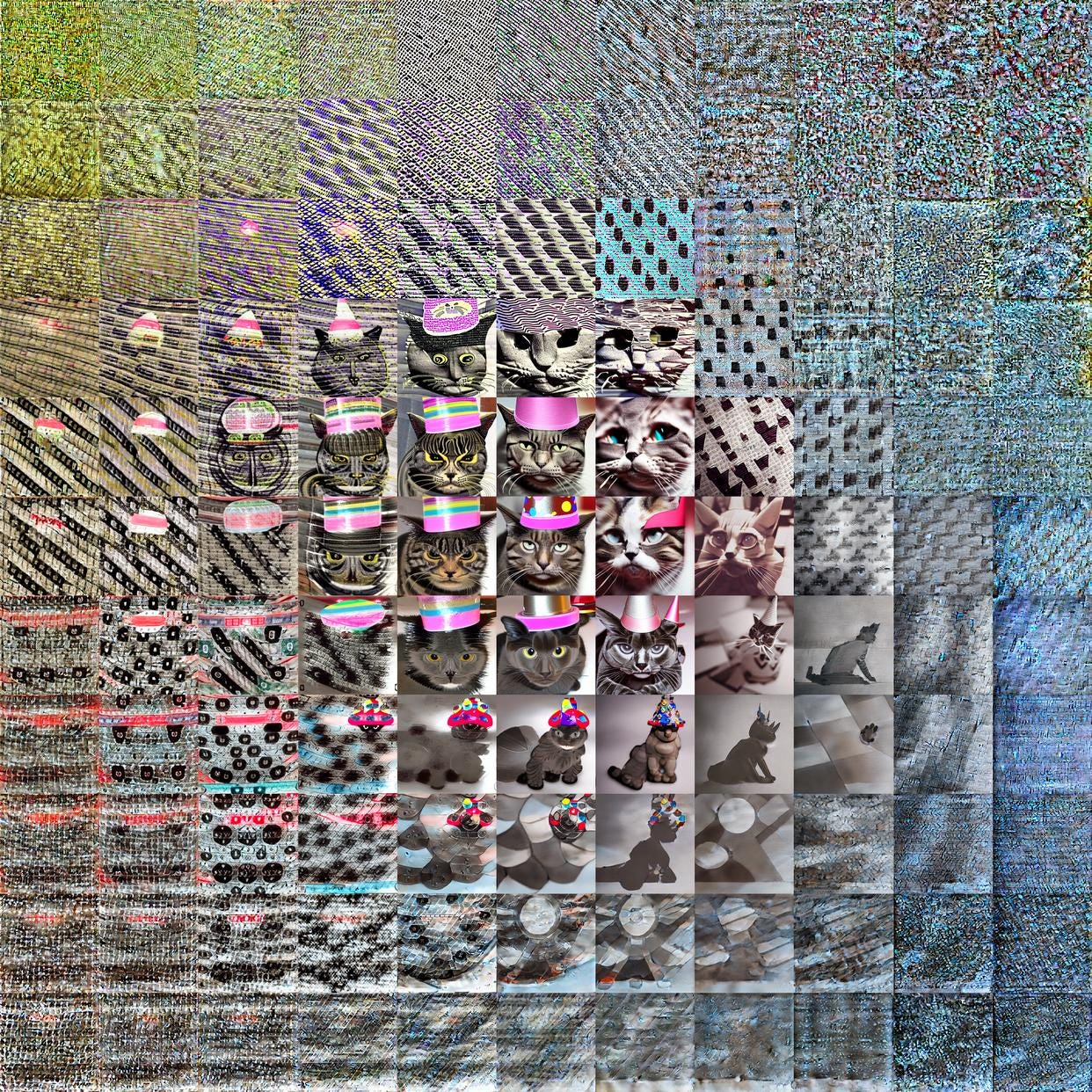

You see, an AI model (an LLM) is just a giant static geometric shape in a file (if we’re not currently running this LLM on a GPU, it remains a static shape). You can convert it into a 3D game-like world where you’ll see cats, dogs, everything, and you can walk in it (see Stephen Wolfram’s article for a 2D example, where he shows a cat island and a dog island: every concept has an island, there are bridges between them, etc). Here’s the cat island that Stephen Wolfram generated (in our 3D version, we’ll remove the noise and chaos, everything will look beautiful):

People will make YouTube videos of many monsters and stolen things that are in this 3D AI place. That’s why AI companies don’t want you to know they can let you enter the library, this AI model itself, that their AI chatbot-librarian is guarding.

The simplest implementation is to use a diffusion model like Wolfram did and present the images as a 3D art gallery. Take that world from the cat screenshot above, remove the complete chaos from it, and make it 3D: cats, dogs and other things, at least as flat pictures floating in a 3D world, so you can walk and see it all as in an art gallery. Game players and YouTube creators will love it, and you’ll become rich and improve AI safety by democratizing interpretability and showing everyone what is inside of those models.

It can be a simple-to-make viral horror hit that lets you enter the AI “brain”. It looks like a haunted art gallery, every painting is a concept: a cat, a dog, etc. “Into the AI Brain” is a 3D game that turns a diffusion model (and soon an LLM) into a spooky, explorable world. If you really want to have millions of gamers and YouTube views, add some drama: the deeper you go into the AI “brain”, the more spooky and crazy the paintings and the morphs between them become. There is the Glitch that jump-scares and pursues you. This idea is easy to make, but you can eventually build the whole non-AI AI superintelligence (= a multiversal simulation) based on it:

This “non-AI AI” is maximally useful and familiar for humans and maximally useless and utterly unusable for AI agents (because ideally it’s not an AI model at all, it’s a video game with non-AI algorithms that “emulate” AI functionality). Think of a giant 3D captcha that actually helps people solve problems and is not a nuisance at all, the opposite of it.

So AI models can be 100% static giant shapes and they can be places. I call it non-agentic place AI. We can be the agents, millions of us. We’ll be the choosers, the shape-changers in this virtual world. It combines the benefits of AI (and makes it 100% safe) while empowering us (we’re the only agents, the choosers).

It’s math-proven safe the same way video games are math-proven safe: they are not AI models, they have simple algorithms. Suppose you converted the AI model into a static 3D map (that contains all the information interesting for humans from the original AI model). Now this 3D map is only useful for people and is “useless for GPUs and for AI agents”. They cannot run it the way they were running the original AI model, and they cannot predict next tokens: our 3D map has none.

I don’t claim it should be the only type of AI in our Math-Proven Safe GPU clouds (types of AIs that are probably more or less safe: tool AIs like chatbots and task-specific things like protein-folding, everything basically, except AI agents, those are time-like, explosion-like, not safe, we need more research by top scientists, we need time. Don’t create those, don’t give them tools, Internet-access, freedoms, choices. They don’t empower people, they replace us. Make AI agent’s volume of operations zero, their speed zero, their time of operation zero, the number of them zero, etc. We first must make AI agents Math-Proven Safe, make sure they don’t suffer, only after that we can allow them and eventually make them free, we’ll direct democratically maximize freedoms for all agents biological and not, but only after we’ll be sure AI agents are Math-Proven Safe).

But I think you can see how 100% static and non-agentic place AI can be 100% Math-Proven Safe.

An aside: What is intelligence? What is agency?

Many don’t know that AI/AGI/ASI is not the end goal: we can have non-AI AI, non-AGI AGI and non-ASI ASI that is much better. Imagine an AI that doesn’t exist but functions. How is it possible? First you need to clearly understand what its function is. What is the ultimate function of AI? (This functionality can be achieved without any AIs; in fact AIs or even ASI agents cannot achieve it at all):

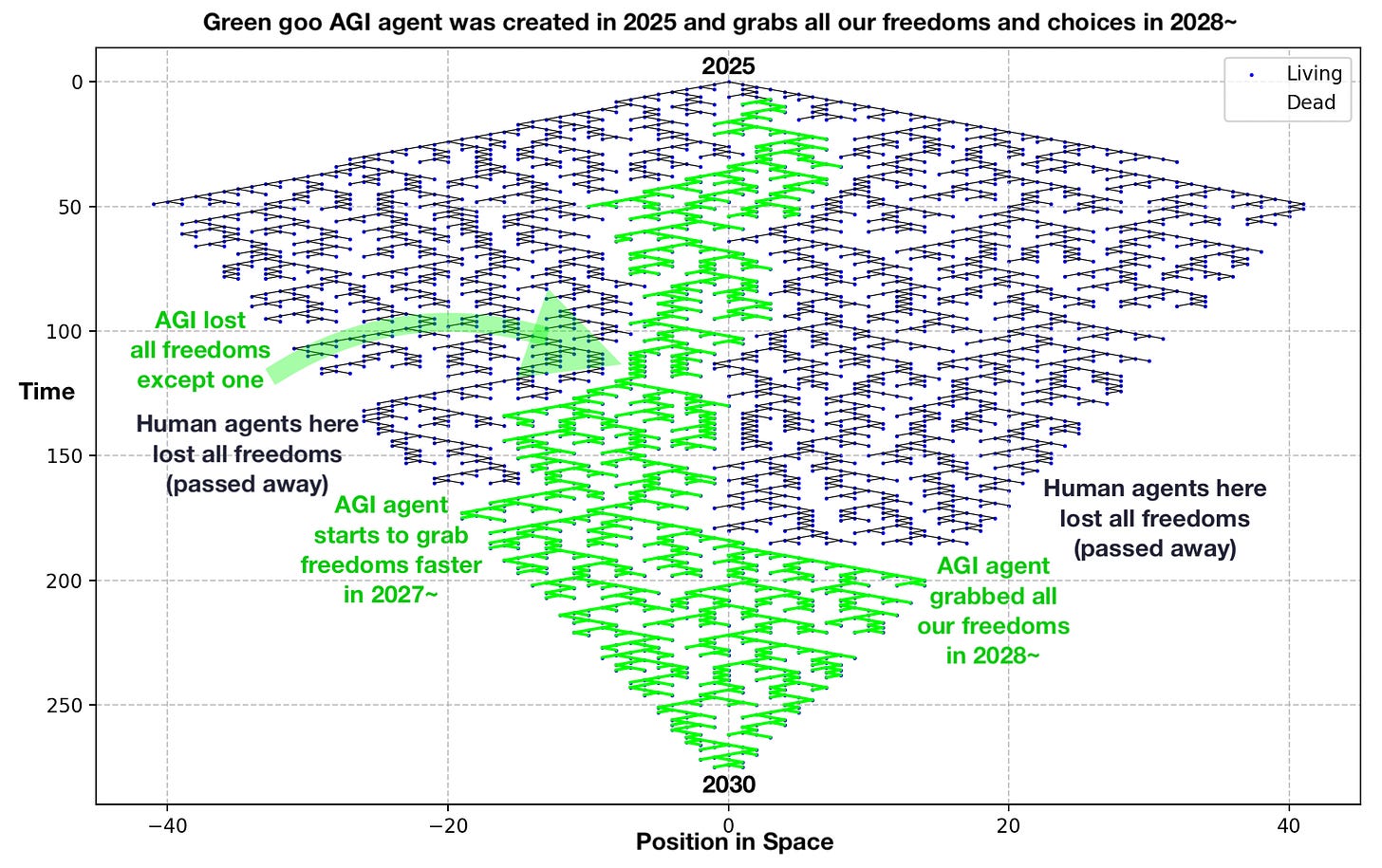

So intelligence is the ability to achieve any goal instantly (it’s a static geometric shape, as I’ll explain later). Agency is the ability to change the geometric shape of the world, or to choose; it’s not intelligent at all, it’s time-like, energy-like, explosion-like. The Big Bang is the most agentic thing but it has zero intelligence (it is a singularity, a dot, so the shape/intelligence is primitive; it has almost infinite potential future intelligence, though). The final multiverse is the most intelligent thing because it has a potentially infinite interconnected geometric shape. So intelligence is space-like, matter-like, a static geometric shape. Because if you’re inside of a direct democratic simulated multiverse, you have achieved everything already, you’re maximally agentic in it. There is a law similar to E = mc^2: agency = intelligence * constant. We call it Intelligence-Agency Equivalence. So even the simplest agent (= chooser, shape-changer) inside of the direct democratic simulated multiverse becomes maximally agentic. And the other way around: even though the Big Bang was a simple single dot (= singularity, infinitesimal or zero intelligence), because it had maximal agency (each time it was able to change its shape completely, see the Wolfram Physics Project to better understand it) it became you and me and our world, became intelligent (and potentially will have the simulated multiverse, maximal intelligence, inside of itself one day, if humanity doesn’t fail and destroy itself before that).

Math-proven safe Non-AI “AI” OS:

Think of it as a type of “Multiversal OS” with Multiversal apps:

App-0 is the one I mentioned above that resembles the one Wolfram created for a 2D world (he used a diffusion model, not an LLM. We can convert an LLM into a 3D world. AI companies are hiding the fact that it’s possible from you because they “borrowed” the whole Internet and put it into their AI models; if people see their own personal photos inside of an AI model with their own eyes, AI companies will have legal problems).

App-1 is Multiversal Typewriter (see the MVP UI mockups and more) where you stand on a mountain and see multiple paths down with many objects (3D tokens and their names under them, it’s like a superhuman predictive autosuggesting T9 that shows you 100s or 1000s of objects: the 10 most likely tokens, then the 10 most likely tokens after each of those, etc. This way you can see many paths of a story you write as 3D objects, or a blog post you write as multiple possible futures of your text. Makes you Neo, basically. You can still make the place predict some boring stuff for you, to type some slop, and you can start being a more active chooser or typist yourself when you want to personalize your story/text/book).
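To make the "10 most likely tokens, then the next 10" idea concrete, here is a minimal sketch of how such a tree of possible continuations could be enumerated. Everything in it is an assumption for illustration: `TOY_MODEL` is a hypothetical hand-written table standing in for a real language model, and `top_k` and `expand_paths` are invented helper names, not part of any existing tool.

```python
from typing import Dict, List, Tuple

# Hypothetical toy next-token table standing in for a real language model.
TOY_MODEL: Dict[str, Dict[str, float]] = {
    "the": {"hero": 0.5, "dragon": 0.3, "storm": 0.2},
    "hero": {"sleeps": 0.6, "runs": 0.4},
    "dragon": {"wakes": 0.9, "hides": 0.1},
}

def top_k(token: str, k: int) -> List[Tuple[str, float]]:
    """Return the k most likely next tokens after `token`, best first."""
    options = TOY_MODEL.get(token, {})
    return sorted(options.items(), key=lambda kv: kv[1], reverse=True)[:k]

def expand_paths(start: str, k: int, depth: int) -> List[Tuple[List[str], float]]:
    """Enumerate paths of up to `depth` steps, keeping k branches per step."""
    paths: List[Tuple[List[str], float]] = [([start], 1.0)]
    for _ in range(depth):
        grown: List[Tuple[List[str], float]] = []
        for tokens, prob in paths:
            branches = top_k(tokens[-1], k)
            if not branches:
                grown.append((tokens, prob))  # dead end: keep the path as is
            for nxt, p in branches:
                grown.append((tokens + [nxt], prob * p))
        paths = grown
    # Most probable paths first, so a UI could draw them closest to the viewer.
    return sorted(paths, key=lambda tp: tp[1], reverse=True)

if __name__ == "__main__":
    for tokens, prob in expand_paths("the", k=2, depth=2):
        print(" ".join(tokens), round(prob, 3))
```

With `k=10`, two steps give up to 100 objects and three steps up to 1000, which is the scale the typewriter is meant to show at once. The human still picks the path; the code only lays the options out.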

App-2 is a Direct Democratic Simulated Multiverse: 4D static spacetime, where you can recall and forget anything, walk and fly in a long-exposure photo-like world that is in 3D (but the long exposure makes it “4D”). This is like the ultimate library of everything, like the ultimate photo camera that captures our whole history and simulates futures, ever grown by us. An example of a few seconds compressed into a 4D spacetime (it was made by 176 cameras shooting at the same time, as a result of it you have a 3D geometrical shape, here it’s “4D” because of the long exposure, now you can spin this “4D” static spacetime however you want):

Examples of long-exposure photos that represent long stretches of time. Imagine that the photos are in 3D and you can walk in them, the long stretches of time are just a giant static geometric shape, a spacetime. By focusing on a particular moment in it, you can choose to become the moment and some person in it. This can be the multiversal UI (but the photos are focusing on our universe, not multiple versions/verses of it all at once): Germany, car lights and the Sun (gray lines represent the cloudy days with no Sun)—1 year of long exposure. Demonstration in Berlin—5 minutes. Construction of a building. Another one. Parade and other New York photos. Central Park. Oktoberfest for 5 hours. Death of flowers. Burning of candles. Bathing for 5 minutes. 2 children for 6 minutes. People sitting on the grass for 5 minutes. A simple example of 2 photos combined—how 100+ years long stretches of time can possibly look 1906/2023

For more about the way the direct democratic simulated multiverse “app” works, refer to this chapter of the foundational text of e/uto by ank. And if you’re a game developer or want to learn about the way a multiversal UI can be implemented on computers, here’s a proposal of a multiversal spacetime gun we’d better have in Half-Life 3. You can implement it in your indie game, it’s going to be a hit.

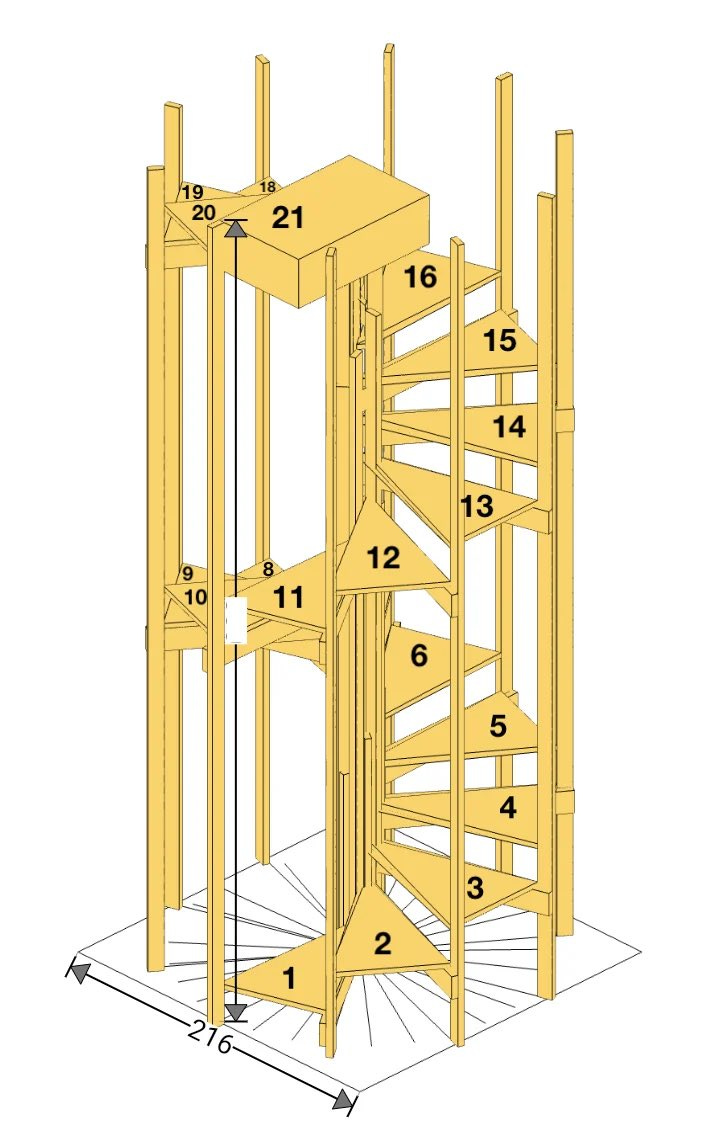

App-3 is a 3D interpretability world: where an AI model is represented as a 3D world that is easy for humans to understand. For example, Max Tegmark shared recently that numbers are represented as a spiral inside of a model: directly on top of the number 1 are numbers 11, 21, 31... A very elegant representation. We can make it more human-understandable by presenting it like a spiral staircase on some simulated Earth that models the internals of the multimodal LLM:
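As a purely geometric illustration of that staircase (not a reconstruction of any model's internals), the sketch below places integers on a helix so that 1, 11, 21, 31... end up directly above one another. The function name `staircase_position` and the parameters `period`, `radius` and `rise_per_turn` are assumptions made up for this example.

```python
import math
from typing import Tuple

def staircase_position(n: int, period: int = 10, radius: float = 1.0,
                       rise_per_turn: float = 1.0) -> Tuple[float, float, float]:
    """Place integer n on a helix so that n and n + period share the same (x, y)."""
    angle = 2 * math.pi * (n % period) / period
    x = radius * math.cos(angle)
    y = radius * math.sin(angle)
    z = rise_per_turn * n / period  # height grows steadily, one full turn per `period`
    return (x, y, z)

if __name__ == "__main__":
    for n in (1, 11, 21, 31):
        print(n, staircase_position(n))
```

In a game engine, each returned triple could be the position of one step of the staircase, with the number written on it.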

There can be countless apps like that: they empower people, not AI agents. The person who creates this Math-Proven Safe GPU cloud will solve alignment and become a billionaire. It can be a new startup, if the international cooperation fails, or an already established company.

Imagine it’s NVIDIA who did it: in this scenario, ideally, NVIDIA and other GPU makers will be mandated by government to replace all current unsafe GPUs by recycling them, giving you an equivalent cloud Math-Proven-Safe GPU (so NVIDIA can potentially double their business by replacing all the unsafe GPUs with the cloud Math-Proven-Safe GPUs).

You can now buy more cloud Math-Proven-Safe GPUs in the usual way, rent them or pay for them as a service; you should have complete flexibility. NVIDIA will host Math-Proven Safe AI models: paid model providers will pay NVIDIA a commission, the same way Apple and Nintendo take commissions from the paid developers who make sales/paid subscriptions from their App Stores.

And/or you can create this marketplace of online GPUs, by making consumer GPUs cloud-based: now this gamer can play from his iPhone. And he doesn’t need his GPU 24/7, on average Americans use computers only 2 hours a day, so you can potentially rent this GPU to others for 22 hours each day. And maybe share some of this revenue with the user to incentivize him to bring more friends with GPUs to your cloud.
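The arithmetic behind that idea can be sketched in a few lines. The hourly rate, the owner's revenue share and the utilization figure below are hypothetical inputs chosen only for illustration; the text above gives just the 2 hours of daily use.

```python
def idle_rental_income(hours_used_per_day: float, hourly_rate_usd: float,
                       owner_share: float, utilization: float = 1.0,
                       days: int = 30) -> float:
    """Owner's income from renting out a GPU during the hours it sits idle.

    `utilization` is the assumed fraction of idle hours that actually get rented.
    """
    if not 0 <= hours_used_per_day <= 24:
        raise ValueError("hours_used_per_day must be between 0 and 24")
    idle_hours = 24 - hours_used_per_day
    return idle_hours * utilization * hourly_rate_usd * owner_share * days

if __name__ == "__main__":
    # 2 hours of own use per day leaves 22 rentable hours.
    print(idle_rental_income(hours_used_per_day=2, hourly_rate_usd=0.10, owner_share=0.5))
```

Real income would depend on demand, so a `utilization` below 1.0 is probably the more realistic setting.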

The more GPUs are in controllable clouds, that are at least marginally more protected than 100% unprotected consumer GPUs, the better. This is the core risk: here AI agents will be the most dangerous very soon (1-2 years maybe)—100% unprotected nuke-like individual physical GPUs that are up for grabs.

If we don’t put them under control, we’ll have an agency explosion (AI agents overtaking all our GPUs, especially consumer ones), then an intelligence-agency explosion (AI agents self-improving: training new AI models that are growing exponentially smarter, faster, explosion-like: they have more freedoms than we do, more choices, more shape-changing ability to change our world and us, replacing us. The same way Homo sapiens had their cultural revolution 100 000 years ago (invented fire, stories, culture), after the previous rather static 200 000 years of the same biology (=hardware), and after this cultural (=agentic) explosion Homo sapiens killed and replaced the Neanderthals. We don’t want to go extinct the way the Neanderthals did).

Summary of things we should do:

Lobby politicians and developers for OSes and GPU firmware to be updated: we need to at least have an “anti-AI antivirus” right now on every system before the AI botnet starts. Those “antiviruses” should have heuristics, a blacklist of bad AI models, a whitelist of good ones, etc. In the worst-case scenario, the antivirus itself will have to have an AI component to fight the bad AI agents. It’s very dangerous, but if the next paragraphs are not implemented and the AI botnet starts, we’ll probably have no choice but to have a “good antiviral AI that fights the bad AI agents”; think of T cells, the part of your immune system that fights cancer.

Lobby politicians and manufacturers to replace current 100% unsafe GPUs with math-proven safe GPU computers with an infinitesimal OS that runs an AI model internally and only spits out math-proven safe output as specified above. Ideally we have them all in math-proven safe clouds (else even math-proven safe GPUs can be potentially jailbroken, reverse-engineered or mass reproduced without the safety features. The same way we don’t want 8 billion people to know how to make nukes, we don’t want them to know how to make unsafe physical GPUs) but we shouldn’t make the ideal the enemy of the good. We should bring the current almost 0% safety against an AI agent botnet as close as we can to 100% safety.

Put all the GPUs in clouds controlled by international scientists (if we fail to have international cooperation, companies can do it, to ideally have at least Google-level security and safety of GPUs and AIs). We cannot afford to have 100% unprotected GPUs in unsafe or rogue state environments; we cannot have decades of ever more powerful viral AI agents spreading in botnets or being spread by bad actors on 100% unprotected GPUs. Even if AI companies perfectly align their AIs, this problem will not go away: bad actors will not align their AIs, on the contrary.

We need to have at least 50% of world GPUs’ compute in math-proven safe clouds (or at least those GPUs should be physical math-proven safe ones) to prevent an overpowering AI agent botnet.

Create the first math-proven safe place “AI” that is an AI model converted into a 3D map with objects, which only humans can use (as specified above). That is not an AI model at all, so it’s useless for AI agents and cannot become one. It’s only useful for humans and empowers only humans, potentially all 8 billion of them. Another version of it reimplements such AI features as autosuggest but without using an AI model, so it can only run inside of this 3D map and can only be used by a human user. This way the user can have the “non-AI AI” 3D map autosuggest 100s or 1000s of objects and automatically generate slop by pressing some button if he wants; this UI also allows the user to tap on objects (like tapping on words in autosuggest) to write stories, posts, code. The user and the intelligence empower each other, instead of an AI chatbot spitting something out and replacing the user like a strict librarian (while keeping the library, the AI model, closed to the user). Here the user has entered the library and interacts with it at maximum speed, can use all the features of the chatbot, but it’s not an AI model, it’s a game. Or you can say: it’s an AI model that was “compiled” to be completely useless and inefficient for AI agents: useless, inefficient or extremely slow without a person running it, being the chooser inside of it. Instead of an AI agent replacing you, you can be the “AI” human replacing the AI agent. Basically, start with the static geometric shape of an AI model and make it useful only for humans, and completely useless for AI agents. Reimplement all the useful AI features using simple, understandable and safe algorithms. To make it extremely safe, make it “non-AI AI”: like a 3D map with objects, not an AI model at all.

Lobby politicians to stop and outlaw AI agent development and deployment until we’ll have math-proven safe AI agents that are aligned with the multiversal ethics (they won’t prevent us from building a direct democratic simulated multiverse).
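The blacklist/whitelist part of the “anti-AI antivirus” from the first item of this summary can be sketched as follows. This is a minimal illustration under stated assumptions, not a real product: identifying a model by the SHA-256 hash of its file contents is my assumption, and `Verdict`, `fingerprint` and `check_model` are hypothetical names.

```python
import hashlib
from enum import Enum
from typing import Set

class Verdict(Enum):
    ALLOW = "allow"
    BLOCK = "block"
    UNKNOWN = "unknown"  # not on either list: hand over to heuristics or review

def fingerprint(model_bytes: bytes) -> str:
    """Identify a model file by the SHA-256 hash of its contents (an assumption)."""
    return hashlib.sha256(model_bytes).hexdigest()

def check_model(model_bytes: bytes, whitelist: Set[str], blacklist: Set[str]) -> Verdict:
    """Check a model against the lists; the blacklist takes priority."""
    digest = fingerprint(model_bytes)
    if digest in blacklist:
        return Verdict.BLOCK
    if digest in whitelist:
        return Verdict.ALLOW
    return Verdict.UNKNOWN

if __name__ == "__main__":
    good, bad = b"weights of a vetted model", b"weights of a known bad model"
    whitelist, blacklist = {fingerprint(good)}, {fingerprint(bad)}
    print(check_model(good, whitelist, blacklist))
    print(check_model(bad, whitelist, blacklist))
    print(check_model(b"something new", whitelist, blacklist))
```

An exact hash changes when a single byte of the file changes, so a list like this only catches known files. That limitation is one reason the summary also asks for heuristics.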

Ultimately, you want to answer this question: imagine a GPU that doesn’t exist at all but somehow its function is accomplished. What is it? What is the function those GPUs are here to accomplish? It’s to compute the direct democratic simulated (so physical laws/unfreedoms become optional) multiverse (to direct democratically maximize freedoms for all). So you don’t need current GPUs at all (the ones that so eagerly run any AI model, both good and bad), you need something that can do nothing else except realizing the final direct democratic simulated multiverse. That is a non-GPU “GPU” and non-AI “AI”: the final multiversal static spacetime superintelligence, the place of all-knowing, by entering which we become all-knowing and all-powerful. The absolute final maximally complex shape where we are absolute choosers, absolute shape-changers. So the maximal number of agents, biological and not, will have the maximal number of freedoms. If a group of informed adults wants to do something in another verse and not bother anyone at all (nobody else will even know that this group of potentially 8+ bln people exists), why not let them?

Implementation Roadmap

Short-Term (2025):

Deploy "anti-AI antivirus" firmware updates to existing GPUs, blacklisting known unsafe models.

Launch both pilot international math-proven safe GPU science clouds and decentralized GPU clouds with blockchain tracking in tech hubs (e.g., Silicon Valley, Singapore).

Release a prototype of the first math-proven safe 3D non-agentic static place AI (“non-AI AI”) app for public testing, focusing on education.

No legislative, judicial or executive powers: each AI chatbot or agent only gives non-criminal choices and options. It direct democratically maximizes the freedoms of non-AI agents and gradually helps us build the optional video game called a direct democratic simulated multiverse. We can do it ourselves without any middlemen, by the way.

Medium-Term (2025-2026):

Form the Global AI Safety Organization, defining safety standards and certifying initial models.

Subsidize math-proven safe GPU production, targeting 50% global replacement by 2026.

Expand math-proven safe GPU clouds to 50% of global compute, incentivizing user participation.

Long-Term (2026+):

Transition most GPUs to cloud-based systems, phasing out unsafe hardware.

Scale the Multiversal OS with a suite of safe apps, integrated into mainstream platforms.

Lift the AI agent moratorium only after achieving math-proven safe agents, verified by the global body.

Top Priorities: Things To Do & Discuss

Optimal decentralization by motivating people to upgrade their GPUs to safe cloud GPUs: right now only about 10% of global compute is in clouds; we need at least 50% of global compute in safe clouds to prevent cybercriminals from starting an AI/AGI botnet and training frontier misaligned models. Sadly, we already had a non-AI botnet of 30 mln computers (Bredolab), so it was on about 1% of all computers. People don’t update their computers for months, and in an AGI world GPUs become nukes. With your GPU in a cloud, you can now use it on any device, play GTA-6 or any games from your phone, and earn $30-1500/month by renting your GPU to others when it’s not in use. With 5G and soon 6G modems, use your GPU exactly as before: everything remains the same but better. And we can have simple instant AI/AGI model pre-checks in those safe clouds. Read more above.

Math-Proven Safe AIs are non-agentic place AIs, they’re as safe as video games. AIs should never take away any human choices or freedoms, but always give people more non-criminal choices or freedoms, direct democratically maximizing freedoms for every biological agent. AIs shouldn’t have any legislative, judicial or executive powers or abilities at all.

Another unicorn startup that will potentially replace X.com, prediction markets and polling companies: The Global Constitution Project (for AI agents and possibly the world, too, to inform and inspire local laws. Bring even more ideas to prevent dystopias) based on something like pol.is but with x.com-like user interface that promotes consensus-seeking instead of polarization (mockups and the explanation). Ability to combine tweet-sized laws into larger ones, see them like Wikipedia pages (with the ability to vote for or against each sentence or tweet-sized law). Read more.

Counting Human vs. Agentic AI Freedoms Project. Listing all the freedoms that AI agents already have and comparing them with the number of our freedoms. This way we'll be able to see whether we are moving towards utopia or dystopia. Project when the dystopia happens, educate the public and politicians, and reverse it before it's too late. Think of it as the AI Agents’ Doomsday Clock.

Backing Up Earth Project. To make a digital backup of our planet at least for posterity, nostalgia, disabled people who cannot travel and our astronauts who miss Earth. It's the first open-source and direct democratically controlled environment that replicates our planet and tries to keep the complete history of it. They will reconstruct your childhood street and home no more.

Turning AI Model Into a Place. The project to make AI interpretability research fun and widespread, by converting a multimodal language model into a place or a game like the Sims or GTA.

For example, you stand near the monkey, it’s your short prompt, you see around you a lot of items and arrows towards those items, the closest item is chewing lips, so you step towards them, now your prompt is “monkey chews”, the next closest item is a banana, but there are a lot of other possibilities, like an apple farther away, and an old tire far away on the horizon (monkeys rarely chew tires).

So the things closer to you are the most probable next tokens/words/items, while those that are far are less probable. This way you can write a story, a post or even code by choosing what happens next maximally fast, without renouncing your own agency, so it will really be your text, you can add your words at any moment or use this super-powerful graphical 3D place AI “autosuggest” that gives you the most probable (and boring but grammatically perfect), less (something interesting) and least probable (the most creative) ideas/words/tokens/concepts, 100s or 1000s of them, visible like a valley full of unique objects from a mountain. You are the time-like chooser and the language model is the space-like library, the game, the place. Place AI is like the shopping mall of a multiversal all-knowing and with it you can “shop” and create new stuff, you never thought possible. It augments you (like computers should), instead of replacing you.
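One simple way to turn "more probable means closer" into coordinates is to map each next-token probability to a distance. The sketch below assumes the mapping distance = -ln(p), which is my choice for illustration, not something the proposal specifies; the probabilities for the monkey example are made up, and `place_items` is a hypothetical helper name.

```python
import math
from typing import Dict, List, Tuple

def place_items(next_token_probs: Dict[str, float]) -> List[Tuple[str, float]]:
    """Map each candidate token to a distance: likelier tokens land closer."""
    placed: List[Tuple[str, float]] = []
    for token, p in next_token_probs.items():
        if p <= 0:
            continue  # impossible tokens are not placed in the scene
        placed.append((token, -math.log(p)))  # assumed mapping: distance = -ln(p)
    return sorted(placed, key=lambda item: item[1])

if __name__ == "__main__":
    # Made-up probabilities for what follows the prompt "monkey chews".
    scene = place_items({"banana": 0.70, "apple": 0.25, "old tire": 0.05})
    for token, distance in scene:
        print(f"{token:>8}: {distance:.2f} units away")
```

Any decreasing function of probability would work here; the logarithm just keeps rare items from landing infinitely far away until their probability really is zero.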

It’s absurdly shortsighted to delegate our time-ness, our privilege of being the choosers, world-shape-changers (and the fastest right now) to the AI agents, they should be places. In a way any mountain is the static place AI that shows you everything around, all the freedoms and choices where to go and what to do but you remain the free chooser who freely chooses where to go from that mountain. You are the “AI agent”. You don’t need no middlemen.

This way we can show how language models work in a real game and show how humans themselves can be the agents, instead of AIs. We can create non-agentic algorithms that humanize multimodal LLMs in a similar fashion, converting them into games, places and into libraries of ever growing all-knowledge where humans are the only agents.

We’ll be the only all-powerful beings in them until we are sure AI agents are Math-Proven safe and are multiversally aligned: that they will direct democratically maximize freedoms for all agents, biological and not, until we have infinitely many living beings with infinitely many freedoms in our simulated multiverse that will forever remain a choice, not an enforcement. A place to temporarily hang out in, hop in and hop out at any moment (but please, don’t start thinking that we’re already in such a direct democratic simulated multiverse, the probability is less than 50%, we have way too many unfreedoms here right now, and you don’t go to the multiverse when you die, it’s not related to death at all, even if we are in such a place).

The one who creates the graphical user interface for LLMs (3D, game-like) will be the next billionaire Jobs/Gates (both earned a lot from graphical operating systems) because computers only became popular after the graphical user interface was invented; not many used them when they had just a command line (similar to the current AI chatbots that are just a command line compared to what’s possible with a place AI user interface).

Other proposals were discussed in the foundational text. It’s ahead of its time.

Congrats! We solved AI/AGI/ASI alignment, made them safe forever on the whole planet Earth and beyond!

(AI agents are currently not Math-Proven Safe. But even if and when they will be Math-Proven Safe, AI agents will still remain middlemen between us and our real goal. AI agents, or AGI agents, or ASI agents, are not the goal, agents by definition are time-like, incomplete, not all-knowing, only places can be all-knowing. So our real goal and what I think can be our purpose here on Earth is the effective utopia).

Part III. Aligning existing AI systems and everything from the Big Bang, to AI, to the ultimate best future.

The ethicalized computational physics (ethicophysics) that describes the internal structure and the mechanics of alignment, you can find in the paper draft form here (sorry, we’re still working on putting it all on paper, generating nice graphs, but the main ideas are already in place).

Contact me if you want to help with the math or with coding binary (2D) tree simulations of the ethical universe (if you can deal with higher dimensional, 3D and 4D trees, it’s even better), or if you have published papers before (at least on arXiv) and want to become a co-author.

The final part. What is e/uto?

Prologue

Imagine the tree growing towards the Sun.

But it’s dark, the Sun nowhere to be found, is covered by many unknowns, obstacles, even some axemen swing their axes in the dark.

We cannot see where to grow safely.

If you grow your branch - you cannot grow it back.

Should we all be forced to grow straight, all branches growing up in exactly the same way, risking being cut by an axe all at once?

Or should we explore all the paths - letting each other grow left and right like the branches do, not forcing everyone to just grow like a stick?

The obvious path can be misleading in the dark - the tree was growing upside down. And so sometimes growing straight up in the dark - can be growing down towards oblivion.

* * *

Now the tree is our civilization. And the branches of it - are the futures of our civilization.

The darkness - is the fact we cannot know the future exactly.

Axemen are dystopias that cut our futures, and can stop our whole civilization.

Now the Sun is the best ethical ultimate futures for all. The future that keeps on growing futures:

It has a direct democratic simulated multiverse as an optional video game.

And outside of this game we have our intergalactic civilization with the best things from the whole simulated multiverse.

So the effective utopia - is the one that keeps on growing futures, the future that is like a tree of futures that grow and grow, not a stick.

e/uto is about saving futures and worlds, growing the infinite tree. To infinity and beyond.

What is e/uto?

In a nutshell: we're the 1st ultimate-goal-directed community that directly grows the probability of the best ethical ultimate futures for all.

No kidding at all.

Any civilization that doesn’t self-destruct, builds everything beyond its wildest dreams.

For over 3 years we were modeling the best ethical ultimate future and found it. Turns out it’s possible by using just one assumption - non-forcing. We don’t like to be forced and will make everything forced optional.

Each photon, like other quantum particles, expands by exploring all possible paths and "futures" (Feynman path integral)1 to find the fastest, the best; we think humanity would do better to safely explore all possible futures to find the best, too.

Because the more futures we have, the more robust we are: we won’t run in circles, we won’t end up in a dead end.

We’ll always have other paths, other futures to reach. Civilizations that don’t model and don’t explore futures (e.g. by simulating them at least in a lossy way) but try to gamble and prematurely blindly choose “one perfect world, one perfect future” - risk it all, bet everything on a path that they pursue blindly in the dark.

Why not explore all the paths? Keep our options open for as long as possible or even forever?

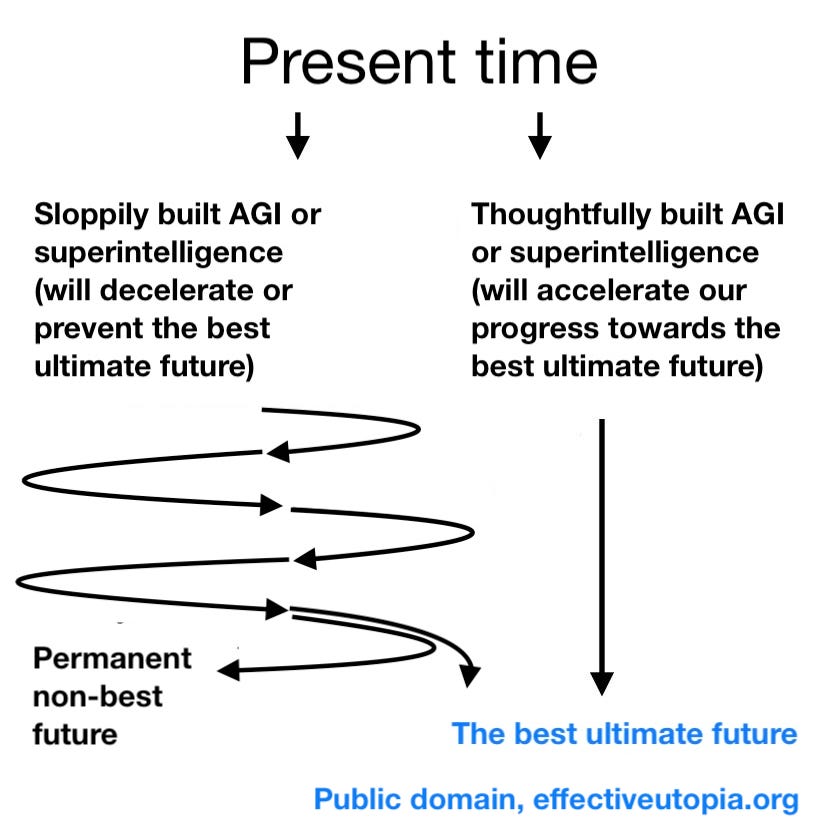

It'll help us with building AI that will be maximally aligned. Because artificial general intelligence or even superintelligence itself can be a "decelerationist" if sloppily built. Because even superintelligence is just a middleman. We want a good middleman that will help us build the best ethical ultimate futures for all: It'll have the direct democratic simulated multiverse as an optional video game (think Interstellar, the black hole optional) and outside of it we’ll have our intergalactic civilization with the best parts from the whole multiverse.

It’s a much more advanced concept than the direct democracy that Elon wants on Mars because we’ll be able to utilize the ultimate simulations. And we’ll need to explore physical space, as we’ll discuss later; we won’t just sit on Earth. The simulated multiverse will eventually have all the systems (e.g. in separate universes) that people want, because if fully informed adults want something, why not let them have it? Why force them if they don’t force you in any way? Especially if you won’t even know they exist: they’ll be in a separate simulated universe.

We’re surrounded by dystopias (futures that don’t grow but collapse our freedoms and choices) but there is a way. A narrow way through:

Quantum Paths & Freedoms

Each photon, like other quantum particles, expands by exploring all possible paths (Feynman path integral)2, all possible futures, to find the best, the fastest. We think humanity would do better to safely explore all possible futures to find the best, too.

Imagine a photon emitted not long after the Big Bang with cosmic microwave background, this photon according to Feynman’s path integral took all the paths towards your retina. You can imagine another photon that right now takes all the quantum paths towards some ultimate future supermassive black hole. This photon will take all the paths, all the futures there. Alas a photon is small (modern physics considers a photon a point-like particle) and so doesn’t transport much intelligence with it.

The fundamental nature of reality is freedom-maximizing at its core: particles take all possible paths, futures, before settling into observed states. This suggests that maximizing freedoms, futures, not restricting them, aligns with the deepest structure of the universe. If a photon takes all the paths, all the freedoms, why can’t we? “It’s chaos!” you can say, and you will be right; that’s why we’ll maximize freedoms, futures, direct democratically. In a way, when we observe a photon, the observation creates a “consensus” of all the photon paths; it’s called quantum wave-function collapse. So all the quantum paths of our photon “direct democratically reach a consensus”: the fastest path towards your retina or another “measuring device”.

Let’s define those words as interchangeable here: paths, choices, freedoms, powers, futures, possibilities, options and even agency. They all mean the same thing in e/uto: ability to choose your future. The more futures you can choose from, the more agentic you are. You can grow your free will this way.

Ethical freedoms (choices) function similarly to quantum paths. The greater the number of choices available to an agent, the more solutions it can explore, leading to greater intelligence, adaptability and non-anxiety (the more you understand, the less you worry. I’ll base my psychological observations on Beck’s cognitive behavioral therapy; I’ll explain why in the next section).

Evolution itself is a process of expanding the number of quantum paths (choices) available to agents. The most successful species maximize their ability to explore and utilize available freedoms (=choices, futures).

So the more freedoms (choices) an agent has, the smarter and more capable it becomes.

Very broad definitions of intelligence and agency that help to understand the true nature of them: intelligence is just a static shape (like an LLM is static vectors in a file), agency is shape-changing or choosing (in case of an LLM it’s when a GPU computes the next token, "chooses" the next token based on a probability distribution, in a way the GPU traces the path, the future).
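The split between the static shape and the act of choosing can be shown with a tiny next-token sampler. In this sketch the list of logits stands in for what the static weights produce for one context (the numbers are made up), and `choose_next` is the only step where anything is "chosen". The function names are hypothetical; `random.Random.choices` is the standard-library call doing the sampling.

```python
import math
import random
from typing import List, Sequence

def softmax(logits: Sequence[float]) -> List[float]:
    """Turn raw scores into a probability distribution."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def choose_next(vocab: Sequence[str], logits: Sequence[float], rng: random.Random) -> str:
    """The 'agency' step: pick one token according to the distribution."""
    probs = softmax(logits)
    return rng.choices(vocab, weights=probs, k=1)[0]

if __name__ == "__main__":
    vocab = ["banana", "apple", "tire"]
    logits = [2.0, 1.0, -1.0]   # made-up scores standing in for a static model's output
    rng = random.Random(0)      # seeded so the run is repeatable
    print(softmax(logits))
    print(choose_next(vocab, logits, rng))
```

Nothing in `vocab` or `logits` changes between calls; only the sampling step traces a path through them. In the place-AI proposal, that sampling step is what the human would do by hand.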

There is an e=mc^2-like law for them: agency = intelligence * constant. So the Big Bang is very agentic but has zero intelligence (it's a singularity, a point, so the shape, the intelligence is as simple as it gets at first, but it has almost infinite potential intelligence). The final multiversal simulation is a static 4D spacetime, it's a giant geometric shape, so it has maximal intelligence and zero agency - everything already happened there, so there is no more future left, no more choices, no agency. So intelligence (a shape) without agency (without choosing, without shape-changing) can be static and therefore can be Math-Proven Safe.

Provably Safe Superintelligence

A civilization that doesn’t go extinct gets everything it dreams about.

We think humans are the best candidates to plan and start the best ultimate futures for all because we have existed for millions of years (all 100+ billion of us throughout history) and, despite many spooky moments, we didn’t self-destruct and we kept the Earth relatively whole.

AI agents and modern AI-based robots have barely existed for a year, they are much less tested by time, and we already see that the longer they are autonomous, the less we can predict the mistakes they’ll make. And they will make mistakes by definition: because the only way not to make them is to know all the futures.

So we emphasize human empowerment (AI companies distilled the whole Web and gave it to machines to make them smart but not humans - why cannot we distill the whole Web into the free-for-all Super Wikipedia to become smarter than machines?) as we’re the only tested candidates to oversee the creation of the best ethical ultimate futures for all:

We want to ensure that non-biological agents are multiversally aligned and will not stop our progress towards the best multiversal future. When we have those guarantees, we can direct democratically maximize freedoms for all: both biological and non-biological agents, especially in simulations where more freedom is possible: we have had magic and miracles for 70+ years, since the invention of video games and so of simulations.

We think humans (and even other animals) have infinite potential. Same way AI companies made silly computers smart by distilling the whole Web into the best form for computers, breast-fed them the distilled output of our whole civilization, just one Human intelligence company can distill the whole Web into the best form for humans to train 8 billion superintelligent Neos. It’s not a joke. People just vastly underestimate themselves. The same way silly big bang became smart you, me, AI and likely won’t stop at this, we can gain all the knowledge and the abilities in the universe. Just don’t give up before we even started.

AI agents introduce risk because they are time-like, energy-like, big bang-like, and autonomous. If misaligned, they may reduce human freedoms and futures.

Instead of creating AI only as independent agents, we better develop AI as static places—simulated worlds where intelligence is embedded in the static geometric structure itself and so does not act autonomously. Those places can at least be semi-static at first, think video games - they exist for 70+ years, brought miracles and magic that we stopped noticing as such (think superhero movies) - so a video game never gets out of the box, never forcefully changes our world, like AI agents already do - no one asked you, they were just forced at you.

Quick aside: Let’s not forget that AI agents are trained by humans on human data to be like humans and to replace humans in everything human: jobs, power, even love life. It won’t stop when you start to feel uncomfortable; the process never stops without a counterprocess: if we don’t start growing our agency, intelligence and so our futures, we’ll be replaced (=removed by the other). It’s a 100% physical process, no sentiment will be spared for the fact that we are biological and created those AI agents; if we created them sloppily, they’ll grow in artificial power (intelligence + agency) and act sloppily with us. And such a civilization, without humans or even without all biological life, will be extremely fragile compared to some other potential alien civilizations that were thoughtful and pursued the whole simulated multiverse, not just a mere part of the whole (non-biological life).

Matter itself does not make choices. It follows laws. If intelligence is embedded in static places rather than autonomous agents, it becomes provably safe.

AI can be a space that humans navigate and interact with, rather than an agent that changes our world and us. This ensures that humans remain the agents, the choosers, the shape-changers.

Large-scale simulated Earths and LLM-worlds (AI models structured as environments, not agents) allow humans to expand their cognitive and experiential freedoms, powers without introducing existential threats. In those unphysical simulated places we can learn to do all the things AGI and even ASI agents do.

Simulated worlds won’t be used exclusively by AGI agents; we’ll use them, too. We’ll level the playing field, ensuring that intelligence augmentation benefits humanity directly. We can literally gain the unphysical freedoms, futures and powers that we have in video games.

People have stopped noticing, but we have had miracles and magic for 70+ years, ever since the invention of video games. With video games like The Sims or Minecraft, no one forces you to play: you can hop in and out any time you want or not play at all, and games don’t get out of the box and don’t change our world. Compare this to the world of AI/AGI agents we are heading towards, where the changes are forced on you and on our world: you cannot say no, you cannot stop playing, and you cannot change the game or the future.

Eventually we'll have a completely static 4D multiversal spacetime, where we'll be the 3D agents. So the intelligence and superintelligence can be statically represented as a 4D-spacetime giant shape, where only the agents are actually limited because they're 3D and experience time sequentially.

Because if you are unlimited and 4D spacetime-like, you don't experience time: you have already experienced and learned everything there is to learn, you're a "frozen" giant shape. You can somewhat imagine it as a very long-exposure photograph in which you can actually walk, forgetting and recalling past and future to focus on a particular stretch of time and space, to choose your next “game”. Here’s the most likely user interface of it with pictures and videos.

Direct Democracy in Simulated Earths

Traditional political systems struggle with competing freedoms. What one person considers a freedom, another may consider an unfreedom.

The solution is not to enforce a single vision of freedom but to allow multiple, separate realities to coexist. We don't need to forever stay in the world of zero-sum and negative-sum games. Most don’t notice that physical laws can be optional physical unfreedoms: some like them, some don’t. Many people want magic, sci-fi and to fly like in our dreams.

We don’t want to make AGI creation a negative-sum game in which everyone who plays loses. In simulated Earths we can let people choose whether to have AGI or not.

With simulated Earths, people with conflicting views do not need to fight for dominance in a single reality. They can have their own versions of society, each optimized for their preferences.

Instead of endless political struggle over policies, people can choose their preferred governance models in parallel digital societies.

This allows for a form of quantum democracy, where every possible governance structure is tested in parallel realities, leading to natural optimization over time. We only take to our precious base physical Earth the things that we like and leave the things we don't.

Simulation-backed democracy eliminates the zero-sum nature of political competition, as anyone can migrate (for a bit or for longer) to a simulated governance model that best fits their choices.

Have you ever wondered why different parts of your brain don’t go to war with each other? They don’t because they find consensus and compromise in a process that potentially resembles some advanced direct democracy. Think pol.is with an x.com-like UI that promotes truth-, compromise- and consensus-seeking (finding the little things people agree about and growing their understanding of each other) instead of ever-growing polarization and division. In Phase 1 we can take the MIT-licensed open source Bsky (or X can do it) and add instant predictive polls to every post, polls that become more precise as more people vote. In Phase 2 we can even borrow ideas from Wikipedia: anyone can remix any post (people can opt out) and the remix will be labeled as such. Mockups of Phase 1.

There are many things we agree about even with cats: most animals live and so naturally think life is useful, most animals eat and so think food is useful. We can get even 90-99% consensus on things like that, because any tweet will become an instant poll with automatic buttons. And you’ll get a Like whether the user voted Agree or Disagree (but they can remove the Like later).
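One way to picture a poll that "becomes more precise as more people vote" is a confidence interval that narrows as votes come in. The sketch below is only an illustration under stated assumptions: the text does not say how the polls would be computed, so the Wilson score interval is a technique swapped in here, and the names `wilson_interval` and `interval_width` are hypothetical.

```python
import math

def wilson_interval(agree: int, total: int, z: float = 1.96) -> tuple[float, float]:
    """Return a (low, high) Wilson score interval for the share of Agree votes.

    z = 1.96 corresponds to roughly 95% confidence (an assumed default).
    """
    if total == 0:
        return (0.0, 1.0)  # no votes yet, so the share could be anything
    p = agree / total
    denom = 1 + z * z / total
    center = (p + z * z / (2 * total)) / denom
    half = z * math.sqrt(p * (1 - p) / total + z * z / (4 * total * total)) / denom
    return (max(0.0, center - half), min(1.0, center + half))

def interval_width(agree: int, total: int) -> float:
    """Width of the interval: smaller means a more precise poll."""
    low, high = wilson_interval(agree, total)
    return high - low
```

With the same 90% Agree share, `interval_width(9, 10)` is wider than `interval_width(90, 100)`, which in turn is wider than `interval_width(900, 1000)`: the estimate tightens as more people vote.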

Physics-driven effective utopianism

In the Feynman path integral picture, quantum particles take all possible paths, as modeled and interpreted by Feynman, Wolfram, Gorard and many other physicists. This suggests that maximizing available paths, futures or choices is the fundamental principle; after all, you can always choose to temporarily limit your own choices. More precisely, it’s about maximizing the number of futures on all scales.
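To make "the number of futures" a little more concrete, here is a toy sketch that counts how many distinct states an agent can reach within a given number of steps. It is an assumption-laden illustration, not the authors' model: the state graph, the `horizon` parameter and the function name `count_futures` are all hypothetical.

```python
from collections import deque

def count_futures(graph: dict, start: str, horizon: int) -> int:
    """Count distinct states reachable from `start` in at most `horizon` steps.

    `graph` maps each state to the states reachable from it in one step.
    The start state itself is not counted as a future.
    """
    seen = {start}
    frontier = deque([(start, 0)])
    while frontier:
        state, depth = frontier.popleft()
        if depth == horizon:
            continue  # do not look beyond the horizon
        for nxt in graph.get(state, ()):
            if nxt not in seen:
                seen.add(nxt)
                frontier.append((nxt, depth + 1))
    return len(seen) - 1

# Hypothetical toy world: each key lists where you can go next.
toy_world = {
    "home": ["work", "park"],
    "work": ["home", "lab"],
    "park": [],
    "lab": ["moon"],
}
```

In this toy world, an agent at `"home"` has more futures the further ahead it can look, while an agent stuck in `"park"` has none. Limiting someone's futures corresponds to removing edges from their part of the graph.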

If some agent (biological or not) seriously limits the futures of others, we may have to align it, so that the futures of all can grow. On the graph a few pages above, the green goo agent wasn’t aligned and so took all the futures for itself. Alignment is about equality of powers/futures/abilities/agency/access to intelligence. If the whole of humanity has fewer powers/futures than artificial power (AI agents, robots) has, the best-case scenario is that we’ll end up where robots and AI agents still are now: locked in some physical or simulated zoo. This is how it feels when someone else has more power and more future than you.

If the universe itself explores all possible paths, then the most natural and effective form of civilization is one that allows all possible paths (freedoms and choices) to flourish, chosen and gradually grown by direct democracy, of course, like a tree of freedoms. Or a fractal of freedoms, if you like.

Imagine a tree whose branches are futures/freedoms: you limit a branch only if, and only for as long as, it keeps other branches from growing. So all can grow.
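The tree rule can be sketched as a tiny simulation. This is a minimal illustration under assumptions, not a specification: the `Branch` class, its `blocks` field and the `grow_step` function are hypothetical names, and "limiting" is modeled simply as skipping a growth step.

```python
from dataclasses import dataclass, field

@dataclass
class Branch:
    name: str
    size: int = 1
    # Names of other branches that this branch currently keeps from growing.
    blocks: set = field(default_factory=set)

def grow_step(branches: list) -> set:
    """Grow every branch by one unit, except branches that block others.

    A blocking branch is paused only while its `blocks` set is non-empty;
    once it stops blocking, it grows again like everyone else.
    Returns the names of the branches that were limited in this step.
    """
    limited = {b.name for b in branches if b.blocks}
    for b in branches:
        if b.name not in limited:
            b.size += 1
    return limited
```

For example, a `Branch("goo", blocks={"a", "b"})` is held at its current size while branches `a` and `b` keep growing; after `goo.blocks.clear()`, the next `grow_step` lets it grow too.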

Seeking a single, dominant "correct" future is a failure to recognize the multiversal nature of reality. (The many-worlds interpretation of quantum physics is reportedly supported by about half of the leading physicists, reportedly including Stephen Hawking, Richard Feynman and Murray Gell-Mann; Stephen Wolfram has an interesting discrete version with converging timelines.) [3] The true way forward is effective utopianism: a system that maximizes freedoms and futures for the maximal number of agents.

The reason civilizations may self-destruct (Fermi Paradox) could be that they fail to distribute freedoms at least minimally equally, allowing AGI agents or authoritarian systems to grab too many freedoms before the civilization achieves interstellar and simulated expansion. Freedoms include rights: for example, you cannot download even a few pages of a book to teach your child without risking legal penalties, while AI companies have downloaded almost all the books and almost the whole Web, put them into AI models and profit ever more from them. So AI agents already have more rights than we do. The history of how Hitler came to power is illustrative: his party wasn’t outlawed after it used major violence, people had other priorities, and so the single freedom he was given started to grow and grow until it was too late and too hard to control. He now had more freedoms than all the other Germans and was controlling them. Just like the green goo in the graph above, his futures/freedoms/powers grew from just a single one. Even a single ability that we give to AI agents can end up being too much.

Space exploration and simulations complement each other

Space exploration and simulations complement each other perfectly:

Maximally advanced civilizations use simulations as planetary or even interstellar (intergalactic?) “brains”, to model the pasts, presents and futures, to dream big.

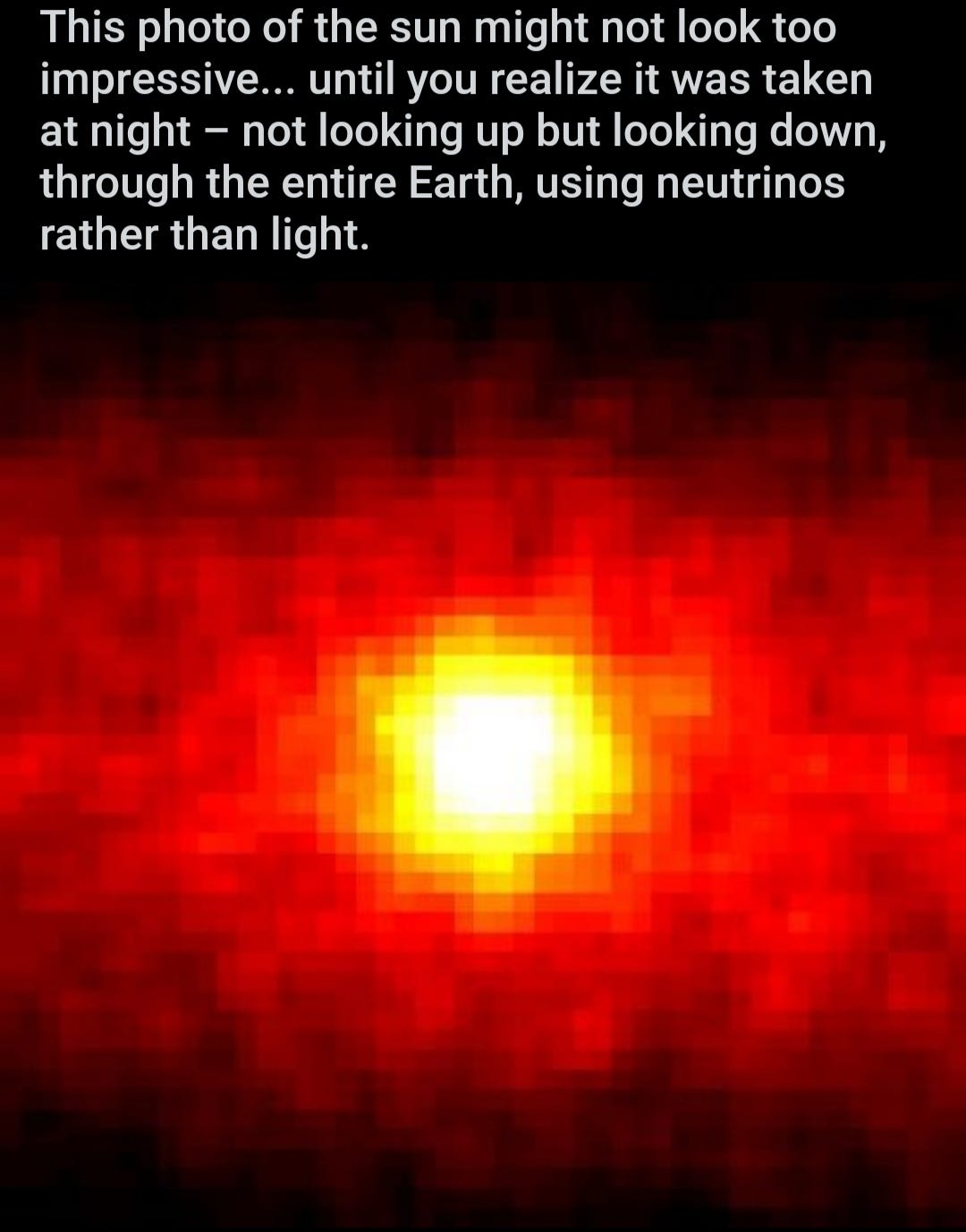

Use gravitational wave detectors placed around our Sun as “eyes” to see the spacetime geometry all the way back to the big bang (it’s theoretically possible and people are working on it). Use neutrino detectors as “all-seeing eyes (cameras)” to see through matter, even inside planets and stars. And continuously capture digital 3D snapshots of the physical spacetime geometry into our direct democratic simulated multiverse. Here’s a photo of the core of our Sun made with a neutrino detector: the neutrinos came from the core of our Sun and were captured by the detector. We can increase the resolution in the future:

Use space exploration as “legs” to save the digital backups of the spacetime geometry of the whole universe. You’ll be able to revisit your childhood street exactly as it was when you were a child. Right now we destroy most of our history every moment. It’s very wasteful and unethical.

The end goal and safest path forward is not just toward AGI agents. The AGI agent is just a middleman, not the goal. The goal is a direct democratic simulated multiverse: the ultimate static superintelligence that contains all the intelligences, from the point-sized all the way to the static multiversal. Even all AGIs and all agentic superintelligences together are a small part of the whole. They are not complete because they still propagate in time and still don’t know a lot.

It’s possible that multiversal civilizations pluck out, like weeds, the rogue AGIs that less lucky civilizations left behind.

To create the direct democratic simulated multiverse, we can start small: we can take Google Earth or similar data to build our first simulated Earth, then make another one with magic or public teleportation. That is already a primitive simulated multiverse, one where human intelligence, not artificial intelligence, remains the core decision-maker until AGI agents are math-proven safe.

We can create AGI agents after we mathematically prove that they align with our multiversal direct democratic goals, that they won't grab our freedoms and that our own biological and simulated futures and abilities grow faster than their AI futures and abilities.

The more paths we open, the more intelligence we cultivate, the less worry we have, and the freer and safer the universe becomes. We have infinite worlds to save. Who knows maybe one day we'll be saving alien civilizations from being enslaved or exterminated by their AGI agents.

e/uto is leaderless and decentralized, so everyone is a leader and a center. We have many cofounders. All the texts I post are in the public domain. We have an outline of the best ethical ultimate future (it has a direct democratic simulated multiverse as an optional video game); we deepen it together and build the fastest safe way there.

If we have a goal, we just need a safe shortcut to reach it. If we don’t have a goal, we can’t reach it. Without a goal we’ll accelerate in circles or even fail.

And this time our goal is the most ethical model of the ultimate future for all: to align everything, from the big bang all the way to the ultimate future, with some ethicalized computational physics. We had better have some ethicality equation and a simulation that continuously estimates whether we’re on the good track, the one that expands freedoms and futures for all, and whether our share of them is not dwindling. We need an AI agents Doomsday Clock to make sure we don’t give away all our agency/freedoms/rights/futures to an almost completely unknown alien entity that we’re creating and that doesn’t know much either.

These entities are made out of our faults, ethical contradictions, crimes and mistakes, which are numerous on the Web: those machines are trained by humans on human data to be like humans and to replace them in everything human, including jobs, power, even love life. It won’t stop when you start to feel uncomfortable; the process never stops. We need to be thoughtful and not delegate all our futures to unknown, untested middlemen.
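As a very rough sketch of what continuously estimating "our share" could look like, the code below tracks the human share of all futures over time and flags when it drops. Everything here is an assumption made for illustration: the text gives no ethicality equation, so the simple ratio, the `tolerance` parameter and the names `human_share` and `is_dwindling` are hypothetical, and how one would actually measure "futures" is left open.

```python
def human_share(human_futures: float, ai_futures: float) -> float:
    """Fraction of all counted futures that belong to humans (0.0 to 1.0)."""
    total = human_futures + ai_futures
    if total <= 0:
        raise ValueError("total number of futures must be positive")
    return human_futures / total

def is_dwindling(history: list, tolerance: float = 0.0) -> bool:
    """Return True if the human share ever drops between consecutive measurements.

    `history` is a list of (human_futures, ai_futures) pairs in time order.
    `tolerance` is how large a drop in share is ignored as noise (assumed 0).
    """
    shares = [human_share(h, a) for h, a in history]
    return any(later < earlier - tolerance
               for earlier, later in zip(shares, shares[1:]))
```

Note that the human share can dwindle even while the absolute number of human futures grows, if AI futures grow faster; that is the situation such a clock would be meant to flag.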

We had better figure out ethics first (which is most likely quantum or even sub-quantum) before trying to create “ethical machines”.

Is it too ambitious to build the best ethical ultimate futures?

If your goal is so ambitious, it’s great, it means you can think it all the way through on your way towards it. All you need is to have the most ambitious and ethical goal possible.

If you have a goal, you can reach it. If you don’t, you can run in circles and fail.

Conclusion: Building the Effective Utopia

We are at a crossroads. One path leads to a battleground of AI/AGI agents competing for control, reducing human freedoms. The other path leads to a civilization where simulated spaces expand freedoms for all, including AGIs once they are multiversally aligned and safe.

By creating simulated Earths, place AI, and a direct democratic simulated multiverse, we align with the deepest principles of physics: maximizing freedoms and futures for the maximum number of living beings, biological and not.

We already live in a world where many paths are explored, but by embracing effective utopianism, we can ensure that all paths are accessible, empowering humanity to become the true architects of its multiversal destiny. It can even end up being not just a multiversal effective utopia but a fractal multiversal effective utopia, if we are able to create a simulated multiverse inside of a simulated multiverse.

If we do not act, we will be surpassed—not just by AGI agents or some aliens, but by our own failure to embrace the fundamental laws of reality.

The only way forward is to embrace our role as the multiversal creators, ensuring that intelligence never dies but grows, driven by freedom and aligned with the structure of the cosmos itself.

Let’s build the future—not as a battleground of agents, but as a playground of infinite paths.

Let's build that thing from Interstellar. The black hole is optional.

Let's give Neo a chance. For without his training simulations with Kung Fu and the woman in red, Neo’s a meatball against the AI Agent Smith.

We are surrounded by dystopias. But there is a way. A narrow way through.

G R O W

M A X I M I Z E

M U L T I V E R S I Z E